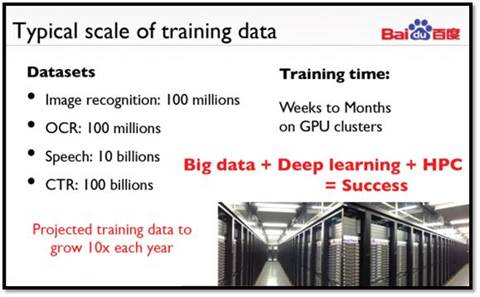

CUDA Spotlight: Ren Wu

By Calisa Cole, posted May 16, 2014

GPU-Accelerated Deep Learning

Dr. Ren Wu is a distinguished scientist at Baidu's Institute of Deep Learning (IDL). He is known for his pioneering research in using GPUs to accelerate big data analytics and his contribution to large-scale clustering algorithms via the GPU. Ren was a speaker at the recent GPU Technology Conference (GTC14), as well as previous GTCs. He was originally featured as a CUDA Spotlight in 2011 when he worked at HP Labs and served as principal researcher in the HP Labs CUDA Research Center.

Q & A with Dr. Ren Wu

NVIDIA: Ren, tell us about Baidu and the Institute of Deep Learning.

As NVIDIA's CEO Jensen Huang pointed out in his GTC14 keynote speech, Baidu is one of the early adopters and leading innovators in the field of deep learning. Recognizing the importance of this field, Baidu formed the Institute of Deep Learning (IDL). Originally IDL was solely focused on deep learning R&D, but gradually it has expanded to become Baidu's research institute for artificial intelligence. Many of our deep-learning powered products are already online and serving billions of requests every day, including (but not limited to) visual search, speech recognition, language translation, click-through-rate estimation, text search and more.

Today one or two workstations with a few GPUs have the same computing power as the fastest supercomputer in the world 15 years ago, thanks to GPU computing and NVIDIA's vision.

One of the critical steps for any machine learning algorithm is a step called "feature extraction," which simply means figuring out a way to describe the data using a few metrics, or "features." Because only a few of these features are used to describe the data, they need to represent the most meaningful metrics. These features have traditionally been hand-crafted by experts.
What's changed in the past few years is that researchers have discovered that by training large, deep neural networks with a vast set of training data, neurons emerge that look for the most salient features as a result of this computationally-intense training process. The most surprising thing is that this method of extracting features by the brute force of massive training data actually outperforms the approach of using hand-crafted features, which has been a major paradigm shift for the community. Of course, the computational cost of training on such large training sets naturally leads people to use GPUs.
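
To make the computational cost concrete, here is a minimal sketch (not Baidu's code) of why this workload suits GPUs: the forward pass of one fully connected layer over a batch of inputs reduces to a single dense matrix multiplication followed by an element-wise nonlinearity. The layer sizes, the ReLU activation, and all names and values below are assumptions chosen for illustration. On a GPU, the multiplication would typically be handed to a dense linear algebra library rather than written as Python loops.

```python
# Illustrative sketch only: sizes, names, and values are assumptions,
# not taken from the interview.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu(matrix):
    """Element-wise max(0, v); one common choice of nonlinearity."""
    return [[max(0.0, v) for v in row] for row in matrix]

def dense_forward(batch, weights):
    """Forward pass of one fully connected layer: relu(batch @ weights)."""
    return relu(matmul(batch, weights))

# Hypothetical toy data: 2 input examples with 3 values each, 2 hidden units.
batch = [[1.0, 2.0, 3.0],
         [0.5, -1.0, 0.0]]
weights = [[0.2, -0.5],
           [0.1, 0.4],
           [-0.3, 0.3]]

activations = dense_forward(batch, weights)
print(activations)
```

The multiplication costs on the order of batch size × inputs × outputs multiply-adds per layer, and every output element can be computed independently, which is the kind of parallelism GPUs are built for.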

I am focused on bringing in even more computing power and designing new deep learning algorithms to better utilize the computing power. We are expecting to see higher levels of intelligence and even a new kind of intelligence. We are pushing the envelope.

NVIDIA: Which CUDA features and libraries do you use?

We use many advanced features offered by CUDA as well as the libraries that come with it. For example, the cuBLAS library from NVIDIA is key for deep-learning based applications, since many algorithms can be mapped into dense matrix manipulation very nicely. We also use the Thrust template library, especially when we do fast prototyping. Of course we have also written our own CUDA code when necessary.

NVIDIA: What challenges are you tackling?

NVIDIA: Tell us more about Baidu's work in mobile image classification and recognition.

At Baidu, we are taking this approach a 'giant step' further. We have trained similar deep neural network models to recognize general classes of objects, as well as different types of objects (for example, flowers, handbags and, of course, dogs). The difference is that, in addition to being deployed in our data centers, our models can also run inside my cell phone, and everything can be done in place without sending data to or retrieving it from the cloud.

The ability to run models in place without connectivity is very important in many scenarios, for example when you travel to foreign countries or are in a remote place, where you need help but may not be connected. Furthermore, the deep neural network engine works directly on a video stream, offering a true 'point and know' experience on your cell phone.

NVIDIA: How will machine learning affect the average consumer's life?

NVIDIA: What are you looking forward to in the next five years, in terms of technology advances?

We will see many breakthroughs in these next five years. Heterogeneous computing will become mainstream. We will continue to see much higher performance and more power efficient chips. I believe true artificial intelligence will emerge, improving people's lives through capabilities such as real-time language translation. As I mentioned at GTC14, big data + deep learning + heterogeneous computing = success!

Bio for Ren Wu

Dr. Ren Wu is a distinguished scientist at Baidu's Institute of Deep Learning (IDL). He is widely known for his pioneering research in using GPUs to accelerate big data analytics as well as his contribution to large-scale clustering algorithms via the GPU.

Prior to joining Baidu, Ren served as chief software architect of Heterogeneous System Architecture (HSA) at AMD. Earlier, he was the senior scientist at HP Labs and principal researcher at the HP Labs CUDA Research Center.

Ren is also known for his work in artificial intelligence and computer game theory. He was the first person to perform systematic computational research on Xiangqi (Chinese chess) endgames, making important discoveries in this domain which improved human knowledge. His Xiangqi program twice won first place in the Olympiad of Computer Chess, and was considered to be the strongest Xiangqi program in the world for many years.