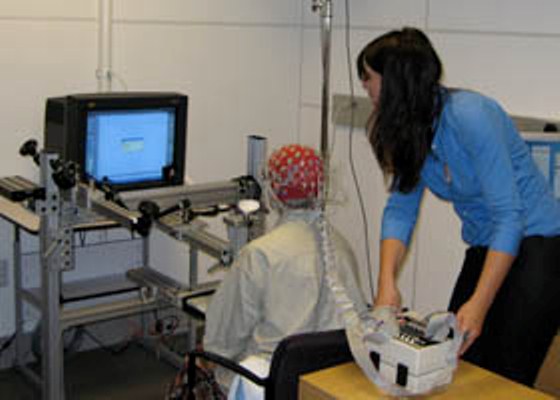

CUDA Spotlight: Adam Gazzaley

By Calisa Cole, posted Sept. 10, 2013

GPU-Accelerated Neuroscience

This week's Spotlight is on Dr. Adam Gazzaley of UC San Francisco, where he is the founding director of the Neuroscience Imaging Center and an Associate Professor in Neurology, Physiology and Psychiatry. His laboratory studies neural mechanisms of perception, attention and memory, with an emphasis on the impact of distraction and multi-tasking on these abilities.

Dr. Gazzaley would like to recognize the following team members who have been instrumental in the Rhythm and the Brain project:

This interview is part of the CUDA Spotlight Series.

Q & A with Adam Gazzaley

NVIDIA: Adam, tell us about the Rhythm and the Brain project, which you are collaborating on with former Grateful Dead drummer Mickey Hart.

The ultimate goal is to improve cognition and mood in both the healthy and impaired, thus positively impacting the quality of our lives. We have already started developing a rhythm video game to be used in a study in our lab.

The goal is to more accurately represent the brain sources and neural networks, as well as to perform real-time artifact correction and mental state decoding. Not only will this improve the visualization capabilities (giving Mickey more accurate recordings for his concerts), but more importantly, it will move EEG closer to being a real-time scientific tool. Then we can use it for a host of new studies in our lab, including neurofeedback and closed-loop brain stimulation.

Where CUDA and the GPU really excel is with very intense computations that use large matrices. We generate that type of data when we’re recording real-time brain activity across many electrodes. There is a lot of potential with this type of high-power technology.

Adam: We primarily use Python, MATLAB and C/C++. Our software is routinely executed on a range of platforms, including Linux (running Fedora 18), Windows 7, and Mac OS (Snow Leopard and Lion). Hardware we currently make use of includes NVIDIA Tesla K20s (for calculations), NVIDIA Quadro 5000s (for visualization) and two Intel quad-core CPUs. We use Microsoft Visual Studio 2010 x64 with CUDA 5.0, with the TCC driver for the Tesla GPUs. The NVIDIA Nsight debugging tools are used with Visual Studio to optimize the code performance and get a better idea of what is happening 'under the hood' of the GPUs in real time.

In general, we found that the CUDA convex optimization implementations scale very well, and run significantly faster than both sequential C++ and MATLAB. The fact that there is a fast interface between MATLAB and CUDA means that we have a number of viable options when it comes to development, and can selectively export the heavy lifting to the GPU.

CUDA has proved invaluable in accelerating these processes. In particular, we have developed a CUDA implementation of the Alternating Direction Method of Multipliers (ADMM) algorithm (Boyd et al., 2011) for distributed convex optimization. Solutions to many biophysical inverse problems we deal with (source localization, dynamical system identification, etc.) can be approximated by use of regularized and/or sparse linear regression. ADMM is well-suited for such problems, and with CUDA we can substantially scale up the size/complexity of the models while maintaining performance suitable for real-time analysis.

Also, our engineering team has written hybrid CPU/GPU implementations of algorithms useful for directed acyclic graphs. Since many brain-related data sets can be modeled as graphs, there is much new work that can and will be done in this area.

The libraries used were cuBLAS, cuSPARSE and Thrust. For the sensor-based EEG work, the matrices tend to be dense, so cuBLAS was preferred.
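
To make the ADMM approach to sparse linear regression described above more concrete, here is a minimal CPU-only sketch in plain Python. It is not the lab's CUDA implementation, which the interview does not show. It assumes the lasso problem (minimize 0.5·||Ax − b||² + λ·||x||₁) as one instance of sparse regression and follows the standard update steps from Boyd et al. (2011), factorizing the matrix AᵀA + ρI once with a Cholesky decomposition and reusing the factor in every iteration. All function names and parameter values (`admm_lasso`, `rho`, `iters`, and so on) are hypothetical and chosen only for illustration.

```python
import math


def cholesky(M):
    """Lower-triangular L with L * L^T == M, for symmetric positive definite M."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def cholesky_solve(L, rhs):
    """Solve (L * L^T) x = rhs by forward, then backward substitution."""
    n = len(L)
    y = [0.0] * n
    for i in range(n):
        y[i] = (rhs[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def soft_threshold(v, kappa):
    """Shrink v toward zero by kappa (proximal operator of the L1 norm)."""
    return math.copysign(max(abs(v) - kappa, 0.0), v)


def admm_lasso(A, b, lam, rho=1.0, iters=200):
    """Illustrative ADMM loop for the lasso; returns the sparse iterate z."""
    m, n = len(A), len(A[0])
    # Build A^T A + rho*I and factor it once; every x-update reuses the factor.
    P = [[sum(A[r][i] * A[r][j] for r in range(m)) + (rho if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(P)
    Atb = [sum(A[r][i] * b[r] for r in range(m)) for i in range(n)]
    z = [0.0] * n
    u = [0.0] * n  # scaled dual variable
    for _ in range(iters):
        # x-update: ridge-like linear solve
        x = cholesky_solve(L, [Atb[i] + rho * (z[i] - u[i]) for i in range(n)])
        # z-update: elementwise soft thresholding
        z = [soft_threshold(x[i] + u[i], lam / rho) for i in range(n)]
        # dual update: accumulate the constraint residual x - z
        u = [u[i] + x[i] - z[i] for i in range(n)]
    return z


# Toy problem (assumed data): with A = I the lasso solution is b soft-thresholded
# by lam, so the result should be close to [2.0, 0.0, -1.0].
A = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
b = [3.0, 0.5, -2.0]
z_hat = admm_lasso(A, b, lam=1.0)
```

The per-iteration work here is a pair of triangular solves plus elementwise operations, which is the kind of dense matrix arithmetic that maps naturally onto a GPU. A fixed iteration count is used for brevity; a practical solver would normally stop based on primal and dual residuals.
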
The cuSPARSE library was used to convert some of the native MATLAB sparse formats into the cuBLAS dense formats, because that was the fastest method of format conversion available. We did have to write a number of custom CUDA C++ algorithms for our work, like a dense Cholesky factorization kernel.

To achieve this goal, we must optimize very large numbers of parameters, which can be computationally prohibitive on ordinary serial computing architectures. With increased computing power afforded by parallel computing architectures, we can dramatically scale up the complexity of our models -- both in terms of the number of model parameters as well as the computational complexity of the algorithms used to find an optimal solution -- without sacrificing real-time processing capabilities.

Much of the real-time signal processing and machine learning we are developing for EEG can be extended to modeling and visualizing brain dynamics from this much richer source of information. Of course, with the increased data dimensionality, the complexity of our models will likewise increase (consider the challenge of learning the parameters of a neural network with 1 million "neurons" versus a network with only 10 neurons). Powerful computing capabilities will be essential for making such inferences tractable, particularly for real-time monitoring.

A further consideration is the use of "Big Data" -- electrophysiological and behavioral data collected from a large number (hundreds, or thousands) of individuals -- to obtain informative priors and constraints that can improve modeling of a single individual's brain dynamics and activity, as well as improve decoding of one's mental states, detection of clinical pathology and brain disease, and more. Analyzing and modeling such large amounts of data also poses a major computational challenge, which can be addressed by more powerful computing capabilities.
However, these algorithms must be capable of operating efficiently, producing accurate inferences of complex models with minimal processing delay. This is a computing challenge which can be addressed by advances both in distributed optimization algorithms and in parallel computing architectures.

The performance of the Kepler generation of GPUs has been impressive, and fortunately there is a helpful community of CUDA developers who actively share source code and advice. Nothing worthwhile is easy, but challenges are better overcome by the collaborative open-source software development model.

To truly understand the brain -- both in health and in disease -- we have to be able to track changes in such distributed activity on the time scale at which they are occurring. Furthermore, the ability to measure and model these dynamics in "real world" settings, outside artificially constrained laboratory environments, is critical to improving our understanding of natural human brain function and its relationship to cognition and behavior. Further still, the ability to make such inferences in "real-time" is of immense value for practical applications of brain monitoring, including medical diagnostics and intervention, human-machine interfaces and neural prosthetics, and even social interaction and entertainment.

In our lab, we are now attempting to integrate real-time neural EEG data with adaptive video game mechanics, neurofeedback algorithms and transcranial electrical stimulation to create a closed-loop system to accelerate the time-course of learning and repair. The hardware and software tools necessary to achieve these goals are rapidly advancing, and improvements in computing capabilities are a key force in driving this advancement.
Taken together, advances over the next decade in high-resolution, real-time brain monitoring will have a profound positive impact on future technologies in multiple domains, from redefining medicine and personalized health care to transforming the way in which humans interact with each other and with machines in our lives.

Bio for Dr. Adam Gazzaley

Dr. Adam Gazzaley obtained an M.D. and a Ph.D. in Neuroscience at the Mount Sinai School of Medicine in New York, completed clinical residency in Neurology at the University of Pennsylvania, and postdoctoral training in cognitive neuroscience at UC Berkeley. He is the founding director of the Neuroscience Imaging Center at UC San Francisco, an Associate Professor in Neurology, Physiology and Psychiatry, and Principal Investigator of a cognitive neuroscience laboratory. His laboratory studies neural mechanisms of perception, attention and memory, with an emphasis on the impact of distraction and multitasking on these abilities. His unique research approach utilizes a powerful combination of human neurophysiological tools, including functional magnetic resonance imaging (fMRI), electroencephalography (EEG) and transcranial electrical stimulation (TES). A major accomplishment of his research has been to expand our understanding of alterations in the aging brain that lead to cognitive decline. His most recent studies explore how we may enhance our cognitive abilities via engagement with custom-designed video games, neurofeedback and TES.

Dr. Gazzaley has authored over 80 scientific articles, delivered over 300 invited presentations around the world, and his research and perspectives have been consistently profiled in high-impact media, such as The New York Times, New Yorker, Wall Street Journal, TIME, Discover, Wired, PBS, NPR, CNN, NBC Nightly News, and most recently, Nature Magazine. Recently, he wrote and hosted the nationally televised, PBS-sponsored special “The Distracted Mind with Dr. Adam Gazzaley.”
Awards and honors for his research include the Pfizer/AFAR Innovations in Aging Award, the Ellison Foundation New Scholar Award in Aging, and the Harold Brenner Pepinsky Early Career Award in Neurobehavioral Science.