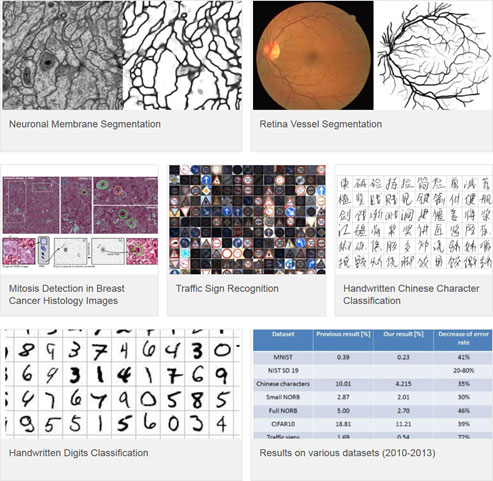

CUDA Spotlight: Dan Ciresan

By Calisa Cole, posted Apr. 25, 2014

GPU-Accelerated Deep Neural Networks

This week's Spotlight is on Dr. Dan Ciresan, a senior researcher at IDSIA in Switzerland and a pioneer in using CUDA for Deep Neural Networks (DNNs). His methods have won five international competitions on topics such as classifying traffic signs, recognizing handwritten Chinese characters, segmenting neuronal membranes in electron microscopy images, and detecting mitosis in cancer histology images. IDSIA (Istituto Dalle Molle di Studi sull'Intelligenza Artificiale) is a research institute for artificial intelligence, affiliated with both the University of Lugano and the University of Applied Sciences of Southern Switzerland. This interview is part of the CUDA Spotlight Series.

[Editor's Note: Dan presented a session on Deep Neural Networks for Visual Pattern Recognition at the GPU Technology Conference in March 2014.]

Q & A with Dan Ciresan

NVIDIA: Dan, tell us about your research at IDSIA.

NVIDIA: Why is Deep Neural Network research important?

NVIDIA: In what way is GPU computing well-suited to DNNs?

Training such a network is difficult on ordinary CPU clusters because training involves a lot of data communication between the units. When the entire network fits on a single GPU instead of a CPU cluster, everything is much faster: communication latency is reduced, bandwidth is increased, and size and power consumption are significantly decreased. Stochastic Gradient Descent, one of the simplest yet best training algorithms, can run up to 40 times faster on a GPU than on a CPU.
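To make the training step concrete, here is a minimal sketch of plain Stochastic Gradient Descent in Python. It is illustrative only and is not Dr. Ciresan's code: the model is a hypothetical single linear unit fit to made-up, noise-free data drawn from y = 2x + 1, and the function name `sgd_linear`, the learning rate, and the epoch count are assumptions chosen for the example. Each update looks at one sample, computes its error, and moves every weight a small step against its gradient.

```python
import random

def sgd_linear(samples, lr=0.05, epochs=200, seed=0):
    """Hypothetical example: fit y ~ w*x + b with plain stochastic gradient descent.

    Each update uses a single (x, y) sample. The gradient of the squared
    error 0.5 * (w*x + b - y)**2 with respect to (w, b) is (err*x, err).
    """
    rng = random.Random(seed)      # fixed seed so the run is reproducible
    w, b = 0.0, 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)          # visit samples in a random order each epoch
        for x, y in data:
            err = (w * x + b) - y  # prediction error for this single sample
            w -= lr * err * x      # step against the gradient w.r.t. w
            b -= lr * err          # step against the gradient w.r.t. b
    return w, b

# Made-up, noise-free training data from y = 2x + 1 on x in [-1, 1].
points = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]
w, b = sgd_linear(points)
print(round(w, 3), round(b, 3))
```

In a real DNN each step touches a very large number of weights, and the forward pass and per-weight gradient updates within a step are highly data-parallel; that inner work is what a GPU spreads across thousands of threads, while the loop over samples itself stays sequential.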

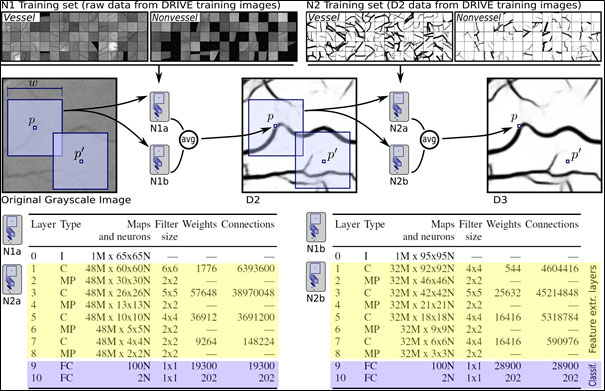

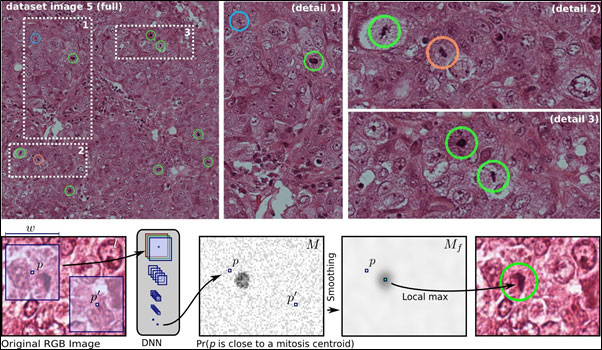

Blood vessel segmentation in retinal images

NVIDIA: How many GPUs are you currently using for a given training run?

NVIDIA: What is the size of training data you're working with? How long does it typically take to train?

NVIDIA: Roughly speaking, how many FLOPS does it take to train a typical DNN?

NVIDIA: Are you continuing to see better accuracy as the size of your training sets increases?

Datasets are becoming bigger mostly because they have far more classes (categories). Several years ago datasets had on average tens of classes with up to tens of thousands of samples per class. Now we are tackling problems with thousands or even tens of thousands of classes. Discerning between so many classes is difficult because many of them tend to be very similar. This is why we need sufficient samples in each class, and therefore a huge number of labeled samples.

NVIDIA: What challenges did you face in parallelizing the algorithms you use?

NVIDIA: Which CUDA features and GPU libraries do you use?

In 2008, when I started to implement my DNN, there were no dedicated libraries. CUBLAS could be used for simple networks like Multi-Layer Perceptrons, but not for Convolutional NNs. Having no other option, I implemented all my code directly in CUDA.

NVIDIA: Tell us about the MICCAI 2013 Grand Challenge on Mitosis Detection.

Mitosis detection in breast cancer histology images

Mitosis detection is important for cancer prognosis, but difficult even for experts. In 2012 our DNN won the ICPR 2012 Contest on Mitosis Detection in Breast Cancer Histological Images. The MICCAI 2013 Grand Challenge had a far more challenging dataset: more subjects, higher variation in slide staining, and difficult and ambiguous cases. Fourteen research groups (universities and companies) submitted results. Our entry was by far the best (26.5% higher F1-score than the second-best entry).

NVIDIA: Do you see DNNs being used for applications beyond visual pattern recognition?

NVIDIA: When did you first see the promise of GPU computing?

In 2008 I was in the last year of my Ph.D. program and I was running experiments on 20 computers for my thesis. Even so, I had to use small networks because I had limited time to train them. Then my Ph.D. adviser bought a GTX 280 the week it was released. I spent much of that summer optimizing my code in CUDA. That was the beginning of the first huge convolutional DNN.

NVIDIA: What will we see in the next five to ten years with DNNs?

DNNs will have a huge impact in the bio-medical field. They will be used for developing new drugs (e.g. automatic screening), as well as for disease prevention and early detection (e.g. glaucoma, cancer). They will help improve our understanding of the human brain. Right now they are the best candidate for the initial stage of building the connectome. In turn, we will use those insights into the physiology of the brain to improve the systems that are currently being used to understand the brain. And DNNs will finally bring autonomous cars onto the streets. DNNs are more robust than current non-NN methods. Most autonomous cars rely exclusively on information acquired by LIDAR and RADAR. DNNs can be used both to analyze that 3D information and to enhance or even replace LIDAR with simple, vision-based navigation.

Bio for Dan Ciresan

Dr. Dan Ciresan received his Ph.D. from "Politehnica" University of Timisoara, Romania. He first worked as a postdoc before becoming a senior researcher at IDSIA, Switzerland. Dr. Ciresan is one of the pioneers of using CUDA for Deep Neural Networks (DNNs). His methods have won five international competitions on topics such as classifying traffic signs, recognizing handwritten Chinese characters, segmenting neuronal membranes in electron microscopy images, and detecting mitosis in breast cancer histology images. Dr. Ciresan has published his results in top-ranked conference proceedings and journals. His DNNs have significantly improved the state of the art on several image classification tasks.

Honors and Awards:
