PhysX

NVIDIA PhysX® is an open-source, scalable, multi-platform physics simulation solution supporting a wide range of devices, from smartphones to high-end multicore CPUs and GPUs.

The SDK brings high performance and precision to industrial simulation use cases, from traditional VFX and game development workflows to high-fidelity robotics, medical simulation, and scientific visualization applications.

Experience Powerful, Flexible Simulation

Unified Solver

PhysX provides a wide range of new features, including FEM soft body simulation, cloth, particles, and fluid simulation, with two-way coupled interaction under a unified solver framework.

Scalability

PhysX offers a highly scalable simulation solution for gaming, robotics, VFX, and more. It runs on a wide range of platforms, from low-power mobile CPUs to high-end GPUs, and includes a new GPU API targeting end-to-end GPU-based reinforcement learning.

Quality and Assurance

PhysX's collision detection and solver provide the simulation stability needed for robust stacking and joints. PhysX also conserves momentum in the articulation system and models gyroscopic forces in the rigid body system.

Physics Simulation SDK

The NVIDIA PhysX core software development kit (SDK) includes PhysX, Blast, and Flow.

PhysX

PhysX is available in NVIDIA Omniverse and as an open-source release under the BSD 3-Clause license, including all CPU source code and GPU binaries.

Blast

The NVIDIA PhysX SDK includes Blast, a destruction and fracture library designed for performance, scalability, and flexibility.

Flow

Flow enables realistic combustible fluid, smoke, and fire simulations. Flow is part of the PhysX SDK.

NVIDIA PhysX SDK Features Overview

| Feature | Implemented on CPUs | Implemented on NVIDIA GPUs |
|---|---|---|
| Rigid Body Dynamics | ✔ | ✔ |
| Scene Query | ✔ | |
| Joints | ✔ | ✔ |
| Reduced Coordinate Articulations | ✔ | ✔ |
| Vehicle Dynamics | ✔ | |
| Character Controllers | ✔ | |
| Soft Body Dynamics (Finite Element Method) | ✔ | |
| PBD (liquid/cloth/inflatable/shape matching) | ✔ | |
| Custom Geometries | ✔ | |
| Blast - distributed with PhysX | ✔ | ✔ |
| Flow - distributed with PhysX | ✔ | |

Key PhysX Features

Rigid Body Dynamics

Simulate the motion of interacting bodies under external forces, such as gravity. PhysX provides industry-proven, scalable rigid body simulation on both CPU and GPU.
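To make the idea of bodies moving under external forces concrete, here is a minimal conceptual sketch in plain Python. It does not use the PhysX API. The `Body` class, the `step` and `simulate` functions, the single vertical axis, and the crude ground plane at `y = 0` are all assumptions chosen for illustration; a production solver handles full 3D rotation, contacts, and constraints.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 along the vertical axis (assumed value)


@dataclass
class Body:
    """Hypothetical body reduced to one vertical degree of freedom."""
    y: float          # height of the body's lowest point above the ground (m)
    vy: float = 0.0   # vertical velocity (m/s)


def step(body: Body, dt: float, restitution: float = 0.0) -> None:
    """Advance one body by dt using semi-implicit (symplectic) Euler."""
    body.vy += GRAVITY * dt   # apply the external force to the velocity first
    body.y += body.vy * dt    # then move using the updated velocity
    if body.y < 0.0:          # crude contact with a ground plane at y = 0
        body.y = 0.0
        body.vy = -restitution * body.vy


def simulate(height: float, seconds: float, dt: float = 1.0 / 60.0) -> Body:
    """Drop a body from `height` and step it for `seconds` of simulated time."""
    body = Body(y=height)
    for _ in range(round(seconds / dt)):
        step(body, dt)
    return body
```

Calling `simulate(10.0, 3.0)` drops a body from 10 m and steps it at 60 Hz; with zero restitution it comes to rest on the ground plane.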

Scene Query

Perform spatial queries against the simulated world to permit perception and reasoning in a simulated environment. Combined with flexible filtering mechanisms, PhysX provides support for raycast, overlap, and sweep queries against the entire world or individual bodies.
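The sketch below illustrates what a filtered raycast query does, using plain Python rather than the PhysX API. The `Sphere` class, the `raycast` function, the `layer` field, and the `keep` filter callback are hypothetical names introduced for this example; only spheres are supported, and spheres that contain the ray origin are skipped for simplicity.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    """Hypothetical scene object used only for this illustration."""
    name: str
    center: Vec3
    radius: float
    layer: int = 0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast(
    origin: Vec3,
    direction: Vec3,  # must be unit length
    max_dist: float,
    spheres: Iterable[Sphere],
    keep: Callable[[Sphere], bool] = lambda s: True,
) -> Optional[Tuple[Sphere, float]]:
    """Return (sphere, distance) for the closest accepted hit, or None."""
    best = None
    for s in spheres:
        if not keep(s):          # filtering happens before the geometric test
            continue
        oc = _sub(origin, s.center)
        b = _dot(oc, direction)
        c = _dot(oc, oc) - s.radius ** 2
        disc = b * b - c
        if disc < 0.0:           # the ray's line misses the sphere
            continue
        t = -b - math.sqrt(disc)  # nearest intersection along the ray
        if t < 0.0 or t > max_dist:
            continue             # behind the origin, containing it, or too far
        if best is None or t < best[1]:
            best = (s, t)
    return best
```

Passing a predicate such as `keep=lambda s: s.layer == 1` restricts the query to one group of objects, which is the role a filtering mechanism plays in a scene query.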

Joints

Joints constrain the way bodies move relative to one another. PhysX provides a suite of common built-in joint types and supports custom joints through a flexible callback mechanism.

Reduced Coordinate Articulations

Reduced coordinate articulations provide a linear-time, guaranteed joint-error-free simulation of a tree of rigid bodies. PhysX's implementation closely matches analytical models.

Vehicle Dynamics

PhysX provides accurate and efficient simulation of vehicles, including tire, engine, clutch, transmission, and suspension models.

Character Controllers

PhysX provides a kinematic character controller that permits an avatar to navigate a simulated world. It supports rich interactions with both static and dynamically simulated bodies.

Soft Body Dynamics

Finite Element Method (FEM) soft bodies simulate measurable properties of hyperelastic materials to form an accurate and efficient model of elastic deformable bodies.

SDF Colliders

A new Signed Distance Field based collision representation allows PhysX to simulate non-convex shapes like gears and cams without convex decomposition.
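As a rough illustration of why a signed distance field handles non-convex shapes directly, the Python sketch below uses the closed-form signed distance of a torus. This is not PhysX code: `torus_sdf` and `sphere_penetration` are hypothetical helpers, and the use of an analytic formula (rather than distances sampled from a mesh) is an assumption made to keep the example short.

```python
import math


def torus_sdf(p, major_radius, minor_radius):
    """Signed distance from point p to a torus lying in the XZ plane.

    Negative inside the tube, zero on the surface, positive outside.
    """
    x, y, z = p
    ring = math.hypot(x, z) - major_radius  # offset from the tube's center circle
    return math.hypot(ring, y) - minor_radius


def sphere_penetration(center, radius, major_radius=2.0, minor_radius=0.5):
    """Penetration depth of a sphere against the torus (0.0 if separated)."""
    return max(0.0, radius - torus_sdf(center, major_radius, minor_radius))
```

A small sphere placed in the hole of the torus reports no penetration, because the field is positive there. A convex hull of the same torus would cover the hole, which is why convex approximations need decomposition for shapes like this.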

Position Based Dynamics

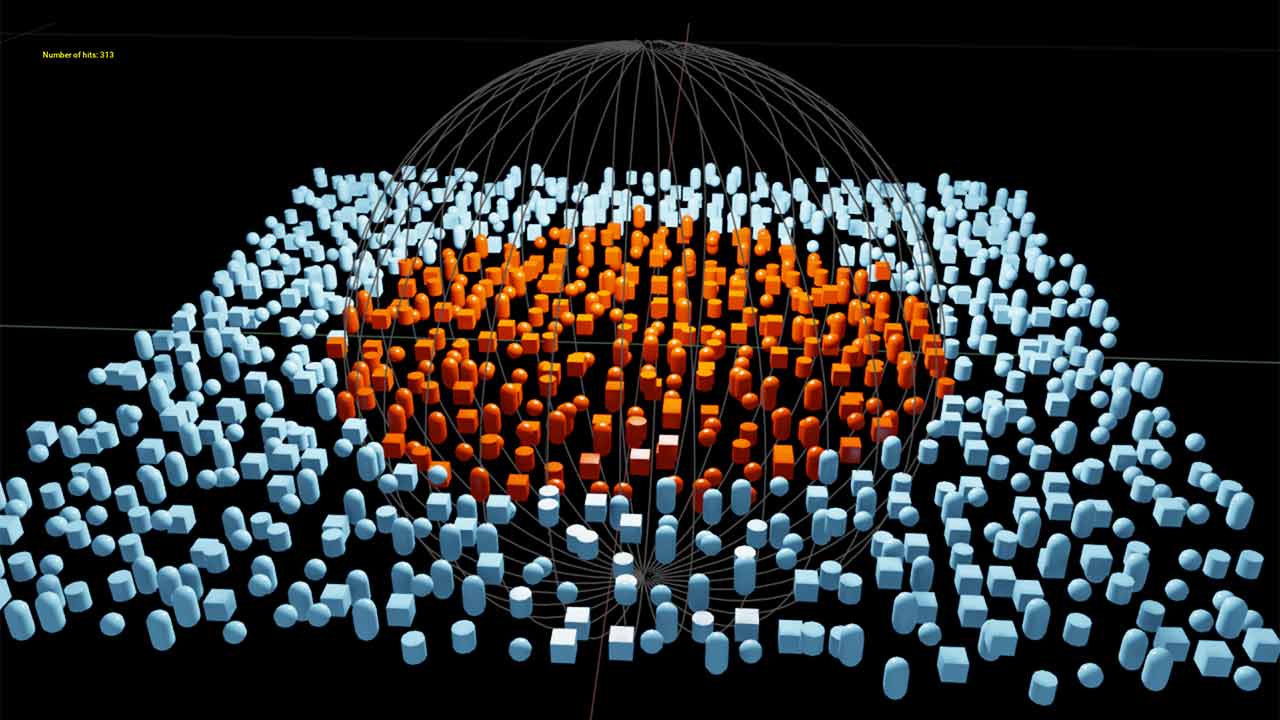

Position Based Dynamics provides a flexible framework for simulating a wide range of phenomena, including liquids, granular materials, cloth, rigid bodies, deformable bodies, and more. It is used extensively in the VFX industry.
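The general Position Based Dynamics loop (predict positions, project constraints, then derive velocities from the position change) can be sketched in a few lines of Python. This is a textbook-style illustration, not PhysX's implementation: `project_distance`, `pbd_step`, the list-based particle layout, and the default gravity value are assumptions, and only distance constraints are shown.

```python
import math


def project_distance(p1, p2, w1, w2, rest_length):
    """Move two particles so their separation equals rest_length.

    w1 and w2 are inverse masses; an inverse mass of 0 pins a particle.
    """
    delta = [b - a for a, b in zip(p1, p2)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0 or w1 + w2 == 0.0:
        return p1, p2
    scale = (dist - rest_length) / (dist * (w1 + w2))
    new_p1 = [a + w1 * scale * d for a, d in zip(p1, delta)]
    new_p2 = [b - w2 * scale * d for b, d in zip(p2, delta)]
    return new_p1, new_p2


def pbd_step(positions, velocities, inv_masses, constraints, dt,
             iterations=10, gravity=(0.0, -9.81, 0.0)):
    """One PBD step. constraints is a list of (i, j, rest_length) tuples."""
    # 1. Predict positions from velocities and external forces.
    predicted = []
    for x, v, w in zip(positions, velocities, inv_masses):
        if w == 0.0:
            predicted.append(list(x))  # pinned particles do not move
            continue
        v_new = [vi + gi * dt for vi, gi in zip(v, gravity)]
        predicted.append([xi + vi * dt for xi, vi in zip(x, v_new)])
    # 2. Iteratively project the predicted positions onto the constraints.
    for _ in range(iterations):
        for i, j, rest in constraints:
            predicted[i], predicted[j] = project_distance(
                predicted[i], predicted[j], inv_masses[i], inv_masses[j], rest)
    # 3. Derive velocities from the corrected positions.
    new_velocities = [[(pi - xi) / dt for pi, xi in zip(p, x)]
                      for p, x in zip(predicted, positions)]
    return predicted, new_velocities
```

With one pinned particle, one free particle, and a single distance constraint between them, repeated calls to `pbd_step` produce a pendulum whose length stays at the rest length. Cloth and other materials follow the same loop with more particles and additional constraint types.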

Custom Geometry

PhysX provides a wide range of built-in geometries, along with a flexible callback mechanism that lets applications introduce their own geometry types into the simulation.

Industrial Applications for Features

| Feature | Autonomous Vehicles | Game Development | HPC Visualization | Industrial Manufacturing | Industrial Applications | Robotics | VFX and Media |
|---|---|---|---|---|---|---|---|
| Rigid Body Dynamics (TGS or PGS solver) | ✔ | ✔ | ✔ | ✔ | ✔ | | |
| Scene Query | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Joints | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Articulations | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Vehicle Dynamics | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Character Controllers | ✔ | ✔ | ✔ | ✔ | | | |
| Soft Body Dynamics | ✔ | ✔ | ✔ | ✔ | ✔ | | |
| Position Based Dynamics | ✔ | ✔ | ✔ | ✔ | | | |
| Flow | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Blast | ✔ | ✔ | ✔ | | | | |

NVIDIA Omniverse

NVIDIA Omniverse™ is a scalable, multi-GPU real-time reference development platform for building and operating metaverse applications. Creators, designers, researchers, and engineers can accelerate their workflows with one-click interoperability between leading software tools in a true-to-reality shared virtual world.

Physically Accurate Simulation

Omniverse is a platform built from the ground up to be physically based and integrated with core technologies, including MDL for materials; PhysX, Flow, and Blast for physics; and RTX technology for real-time ray and path tracing. Omniverse features several core apps tailored to accelerate specific workflows.

Develop Tools on Omniverse

Unlike monolithic development platforms, Omniverse was designed to be easily extensible and customizable with a modular development framework. Developers can build extensions, apps, and microservices using Omniverse Kit.

Get Started on Omniverse

Start Building

Access all the developer resources you’ll need to start building on Omniverse, including free tutorials, documentation, and our beginner’s training to get started with USD.

Become an Omnivore

Join our community! Attend our weekly live streams on Twitch and connect with us on Discord and our forums.

Get Technical Support

Having trouble? Post your questions in the forums for quick guidance from Omniverse experts, or refer to the platform documentation.

Live Training Sessions

Want to dive deeper into NVIDIA Omniverse? Attend a live training with a certified instructor from FMC.
