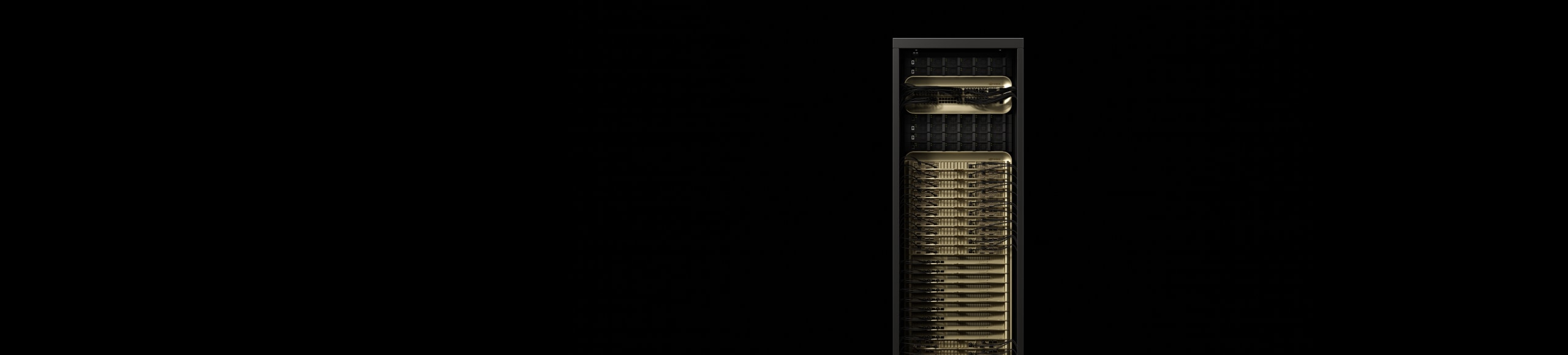

NVIDIA GB200 NVL72

Powering the era of accelerated computing.

Overview

Unlocking Real-Time Trillion-Parameter Models

The NVIDIA GB200 NVL72 connects 36 Grace CPUs and 72 Blackwell GPUs in a rack-scale, liquid-cooled design. Its 72-GPU NVIDIA NVLink™ domain acts as a single, massive GPU, delivering 30x faster real-time trillion-parameter large language model (LLM) inference and 10x greater performance for mixture-of-experts (MoE) architectures.

The GB200 Grace Blackwell Superchip is a key component of the NVIDIA GB200 NVL72. It connects two high-performance NVIDIA Blackwell Tensor Core GPUs to an NVIDIA Grace™ CPU over the NVLink-C2C interconnect.
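As a quick sanity check on the composition described above, the sketch below models one superchip as one Grace CPU plus two Blackwell GPUs and multiplies out to rack level. The `Superchip` class and `rack_totals` helper are hypothetical names for illustration, and the figure of 36 superchips per rack is inferred from the 36 CPUs and 72 GPUs quoted for GB200 NVL72 rather than taken from a separate specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Hypothetical model of one GB200 superchip: 1 Grace CPU + 2 Blackwell GPUs."""
    grace_cpus: int = 1
    blackwell_gpus: int = 2


def rack_totals(superchip: Superchip, count: int) -> dict:
    """Total CPUs and GPUs for `count` identical superchips."""
    return {
        "grace_cpus": superchip.grace_cpus * count,
        "blackwell_gpus": superchip.blackwell_gpus * count,
    }


# Assumption: 36 superchips per rack, inferred from the 36 CPU / 72 GPU totals.
totals = rack_totals(Superchip(), 36)
print(totals)  # {'grace_cpus': 36, 'blackwell_gpus': 72}
```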

Highlights

Supercharging Next-Generation AI and Accelerated Computing

LLM inference and energy efficiency: token-to-token latency (TTL) = 50 milliseconds (ms) real time, first-token latency (FTL) = 5 s, 32,768 input / 1,024 output tokens; NVIDIA HGX™ H100 scaled over InfiniBand (IB) vs. GB200 NVL72.

LLM training: 1.8T-parameter MoE, 4,096x HGX H100 scaled over IB vs. 456x GB200 NVL72 scaled over IB. Cluster size: 32,768 GPUs.
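The benchmark conditions above translate into a few concrete numbers. The sketch below reads TTL as token-to-token latency and FTL as first-token latency, and uses a deliberately simple latency model (first token after FTL, each later token after one TTL) that ignores batching and queuing. It also counts the GPUs behind the two training configurations, assuming 8-GPU HGX H100 systems. All helper and constant names are illustrative.

```python
# Figures from the benchmark footnote; helper names are illustrative only.
TTL_S = 0.050       # token-to-token latency (s)
FTL_S = 5.0         # first-token latency (s)
OUTPUT_TOKENS = 1_024


def tokens_per_second_per_user(ttl_s: float) -> float:
    """Steady-state decode rate seen by a single user."""
    return 1.0 / ttl_s


def request_latency_s(ftl_s: float, ttl_s: float, n_out: int) -> float:
    """Simple model: first token after FTL, then n_out - 1 tokens at TTL each."""
    return ftl_s + (n_out - 1) * ttl_s


# Training comparison: GPUs behind "4096x HGX H100" and "456x GB200 NVL72".
GPUS_PER_HGX_H100 = 8        # assumption: 8-GPU HGX H100 systems
GPUS_PER_GB200_NVL72 = 72

h100_gpus = 4_096 * GPUS_PER_HGX_H100
gb200_gpus = 456 * GPUS_PER_GB200_NVL72

print(tokens_per_second_per_user(TTL_S))                         # 20.0
print(round(request_latency_s(FTL_S, TTL_S, OUTPUT_TOKENS), 2))  # 56.15
print(h100_gpus, gb200_gpus)                                     # 32768 32832
```

A 50 ms TTL therefore corresponds to 20 tokens per second per user, and the two training clusters land at roughly the same scale (32,768 vs. 32,832 GPUs).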

Database join and aggregation workload with Snappy/Deflate compression, derived from the TPC-H Q4 query. Custom query implementations for x86, a single H100 GPU, and a single GPU from GB200 NVL72 vs. Intel Xeon 8480+.
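TPC-H Q4 (the "order priority checking" query) counts, per order priority, the orders placed in a three-month window that have at least one line item received after its commit date. Structurally that is a semi-join between ORDERS and LINEITEM followed by a group-by count. The sketch below shows that shape in plain Python on a few made-up rows. It is not NVIDIA's custom implementation, it skips the Snappy/Deflate decompression step entirely, and the row contents and the `q4` function name are hypothetical.

```python
from collections import Counter
from datetime import date

# Hypothetical rows; only the columns Q4 touches.
orders = [
    # (o_orderkey, o_orderdate, o_orderpriority)
    (1, date(1993, 7, 5), "1-URGENT"),
    (2, date(1993, 8, 20), "2-HIGH"),
    (3, date(1993, 9, 1), "1-URGENT"),
    (4, date(1993, 12, 1), "3-MEDIUM"),  # outside the Jul-Sep window
]
lineitem = [
    # (l_orderkey, l_commitdate, l_receiptdate)
    (1, date(1993, 7, 10), date(1993, 7, 15)),  # received late
    (2, date(1993, 9, 1), date(1993, 8, 30)),   # received on time
    (3, date(1993, 9, 5), date(1993, 9, 9)),    # received late
    (4, date(1993, 12, 5), date(1993, 12, 9)),  # received late
]


def q4(orders, lineitem, start: date, end: date) -> list[tuple[str, int]]:
    # Build side of the semi-join: keys of orders with a late line item.
    late_orders = {key for key, commit, receipt in lineitem if commit < receipt}
    # Probe side + aggregation: count qualifying orders per priority.
    counts = Counter(
        prio
        for key, odate, prio in orders
        if start <= odate < end and key in late_orders
    )
    return sorted(counts.items())


print(q4(orders, lineitem, date(1993, 7, 1), date(1993, 10, 1)))
# [('1-URGENT', 2)]
```

The same two phases (build a set of qualifying keys, then probe it while aggregating) are what a hash-based semi-join parallelizes, whether it runs on a CPU or a GPU.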

Projected performance subject to change.

NVIDIA GB200 NVL4

NVIDIA GB200 NVL4 unlocks the future of converged HPC and AI. It unifies four Blackwell GPUs, connected by an NVLink bridge, with two Grace CPUs over the NVLink-C2C interconnect. Compatible with liquid-cooled NVIDIA MGX™ modular servers, it delivers up to 2x the performance of the prior generation for scientific computing, AI-for-science training, and inference applications.


Specifications

GB200 NVL72 Specs¹

| Specification | GB200 NVL72 | GB200 Grace Blackwell Superchip |
|---|---|---|
| Configuration | 36 Grace CPUs, 72 Blackwell GPUs | 1 Grace CPU, 2 Blackwell GPUs |
| NVFP4 Tensor Core² | 1,440 \| 720 PFLOPS | 40 \| 20 PFLOPS |
| FP8/FP6 Tensor Core² | 720 PFLOPS | 20 PFLOPS |
| INT8 Tensor Core² | 720 POPS | 20 POPS |
| FP16/BF16 Tensor Core² | 360 PFLOPS | 10 PFLOPS |
| TF32 Tensor Core² | 180 PFLOPS | 5 PFLOPS |
| FP32 | 5,760 TFLOPS | 160 TFLOPS |
| FP64 / FP64 Tensor Core | 2,880 TFLOPS | 80 TFLOPS |
| GPU Memory \| Bandwidth | 13.4 TB HBM3E \| 576 TB/s | 372 GB HBM3E \| 16 TB/s |
| NVLink Bandwidth | 130 TB/s | 3.6 TB/s |
| CPU Core Count | 2,592 Arm® Neoverse V2 cores | 72 Arm Neoverse V2 cores |
| CPU Memory \| Bandwidth | 17 TB LPDDR5X \| 14 TB/s | Up to 480 GB LPDDR5X \| Up to 512 GB/s |

1. Specifications in sparse | dense.
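Because the GB200 NVL72 is built from 36 GB200 superchips, most rows of the NVL72 column should equal 36 times the superchip column, up to rounding. The sketch below checks that arithmetic with values copied from the table. The dictionary keys and the `mismatches` helper are illustrative names, and the 1% tolerance is an assumption chosen to absorb the table's rounding (for example, 36 × 3.6 TB/s = 129.6 TB/s, listed as 130 TB/s). The CPU memory rows are left out because the per-superchip figures there are stated as upper bounds ("up to").

```python
SUPERCHIPS_PER_RACK = 36  # 36 x (1 CPU + 2 GPUs) = 36 CPUs + 72 GPUs

# Per-superchip values from the right-hand column of the table.
SUPERCHIP = {
    "NVFP4 sparse (PFLOPS)": 40,
    "NVFP4 dense (PFLOPS)": 20,
    "FP8/FP6 (PFLOPS)": 20,
    "INT8 (POPS)": 20,
    "FP16/BF16 (PFLOPS)": 10,
    "TF32 (PFLOPS)": 5,
    "FP32 (TFLOPS)": 160,
    "FP64 (TFLOPS)": 80,
    "HBM3E capacity (GB)": 372,
    "HBM3E bandwidth (TB/s)": 16,
    "NVLink bandwidth (TB/s)": 3.6,
    "Arm Neoverse V2 cores": 72,
}

# Rack-level values as listed in the GB200 NVL72 column.
RACK_LISTED = {
    "NVFP4 sparse (PFLOPS)": 1_440,
    "NVFP4 dense (PFLOPS)": 720,
    "FP8/FP6 (PFLOPS)": 720,
    "INT8 (POPS)": 720,
    "FP16/BF16 (PFLOPS)": 360,
    "TF32 (PFLOPS)": 180,
    "FP32 (TFLOPS)": 5_760,
    "FP64 (TFLOPS)": 2_880,
    "HBM3E capacity (GB)": 13_400,  # listed as 13.4 TB
    "HBM3E bandwidth (TB/s)": 576,
    "NVLink bandwidth (TB/s)": 130,
    "Arm Neoverse V2 cores": 2_592,
}


def mismatches(per_superchip: dict, listed: dict, n: int, rel_tol: float = 0.01) -> list:
    """Rows where n x the per-superchip value differs from the listed
    rack value by more than rel_tol (relative to the listed value)."""
    bad = []
    for name, value in per_superchip.items():
        total = value * n
        if abs(total - listed[name]) / listed[name] > rel_tol:
            bad.append((name, total, listed[name]))
    return bad


print(mismatches(SUPERCHIP, RACK_LISTED, SUPERCHIPS_PER_RACK))  # []

# Per-GPU view: halve the superchip's GPU-side figures (2 GPUs per superchip).
print(SUPERCHIP["NVLink bandwidth (TB/s)"] / 2)  # 1.8
print(SUPERCHIP["HBM3E capacity (GB)"] / 2)      # 186.0
```

Halving the superchip figures gives a per-GPU view: 1.8 TB/s of NVLink bandwidth and 186 GB of HBM3E per Blackwell GPU.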

NVIDIA GB300 NVL72

The NVIDIA GB300 NVL72 features a fully liquid-cooled, rack-scale architecture that integrates 72 NVIDIA Blackwell Ultra GPUs and 36 Arm®-based NVIDIA Grace™ CPUs into a single platform purpose-built for test-time scaling inference and AI reasoning. AI factories accelerated by the GB300 NVL72, together with NVIDIA Quantum-X800 InfiniBand or Spectrum-X Ethernet, ConnectX-8 SuperNICs, and NVIDIA Mission Control management, deliver up to a 50x overall increase in AI factory output performance compared to NVIDIA Hopper-based platforms.