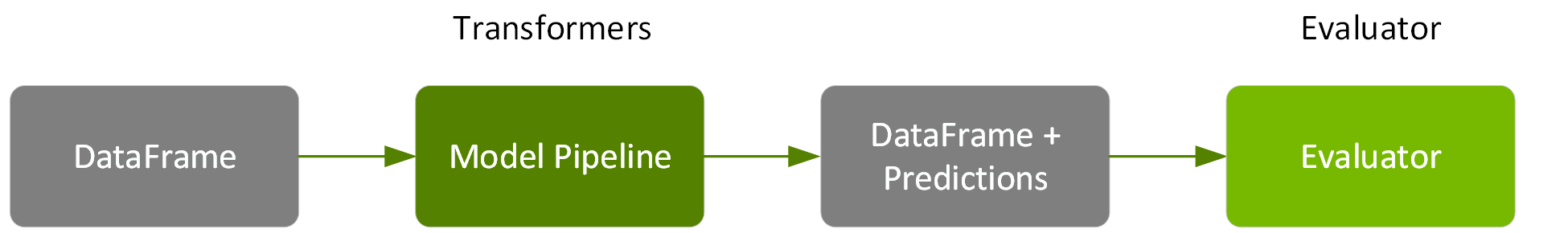

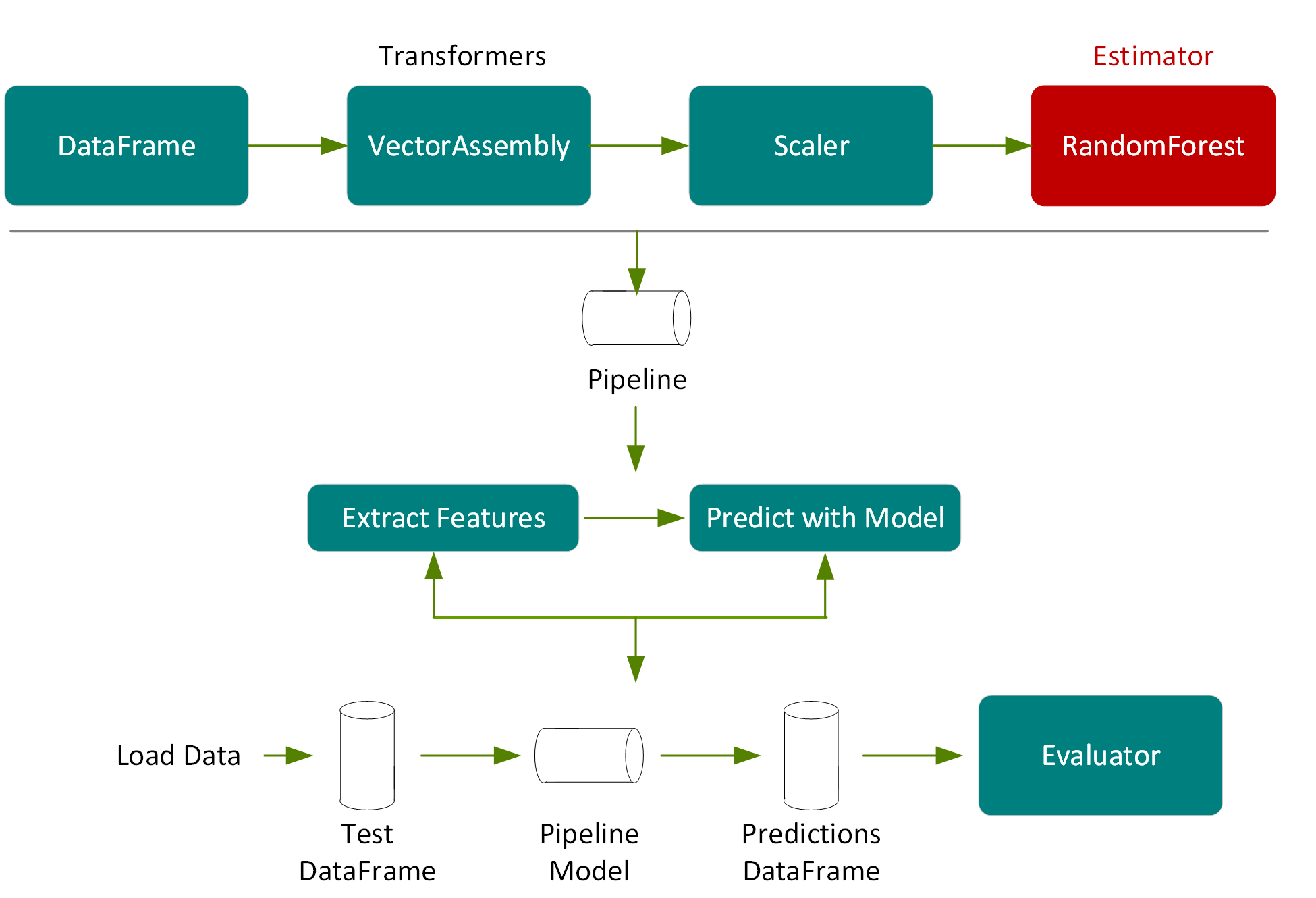

Next, we use the test DataFrame, a 20% random split of the original DataFrame that was held out from training, to measure the accuracy of the model.

In the following code, we call transform on the pipeline model. This passes the test DataFrame through the pipeline steps in order: first the feature extraction stage, then the random forest model chosen by model tuning, which estimates a value for each row. The result is a new DataFrame with the predictions in the prediction column.
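A fitted Spark PipelineModel applies its stages in order, feeding each stage's output DataFrame into the next. The plain-Scala sketch below models only that ordering with a fold. It does not use Spark, and the Row alias, the Stage trait, the column names, and both stages (a ratio feature and a linear "model" with an arbitrary coefficient standing in for the random forest) are hypothetical stand-ins for illustration.

```scala
// Plain-Scala sketch (no Spark): a pipeline as an ordered list of stages.
object PipelineSketch {
  // Hypothetical stand-in for a DataFrame row: column name -> value
  type Row = Map[String, Double]

  // Hypothetical stand-in for a fitted Spark Transformer
  trait Stage {
    def transform(rows: Seq[Row]): Seq[Row]
  }

  // Hypothetical feature stage: derives a feature column from two raw columns
  val featureStage: Stage = new Stage {
    def transform(rows: Seq[Row]): Seq[Row] =
      rows.map(r => r + ("roomsPerHousehold" -> r("rooms") / r("households")))
  }

  // Hypothetical "model" stage: a linear rule with an arbitrary coefficient,
  // standing in for the tuned random forest
  val modelStage: Stage = new Stage {
    def transform(rows: Seq[Row]): Seq[Row] =
      rows.map(r => r + ("prediction" -> 50000.0 * r("roomsPerHousehold")))
  }

  // Apply each stage to the output of the previous one, in order
  def pipelineTransform(stages: Seq[Stage], rows: Seq[Row]): Seq[Row] =
    stages.foldLeft(rows)((current, stage) => stage.transform(current))

  def main(args: Array[String]): Unit = {
    val testRows: Seq[Row] = Seq(
      Map("rooms" -> 6.0, "households" -> 2.0, "medhvalue" -> 140000.0)
    )
    val out = pipelineTransform(Seq(featureStage, modelStage), testRows)
    // The original columns are kept; the new columns are appended
    out.foreach(r => println(s"prediction=${r("prediction")} medhvalue=${r("medhvalue")}"))
  }
}
```

Reordering the stages in this sketch would fail, because the model stage reads a column that only the feature stage creates; that dependency is why feature extraction has to run before the model.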

val predictions = pipelineModel.transform(testData)

predictions.select("prediction", "medhvalue").show(5)

result:

+------------------+---------+
|        prediction|medhvalue|
+------------------+---------+
|104349.59677450571|  94600.0|
| 77530.43231856065|  85800.0|
|111369.71756877871|  90100.0|
| 97351.87386020401|  82800.0|
+------------------+---------+

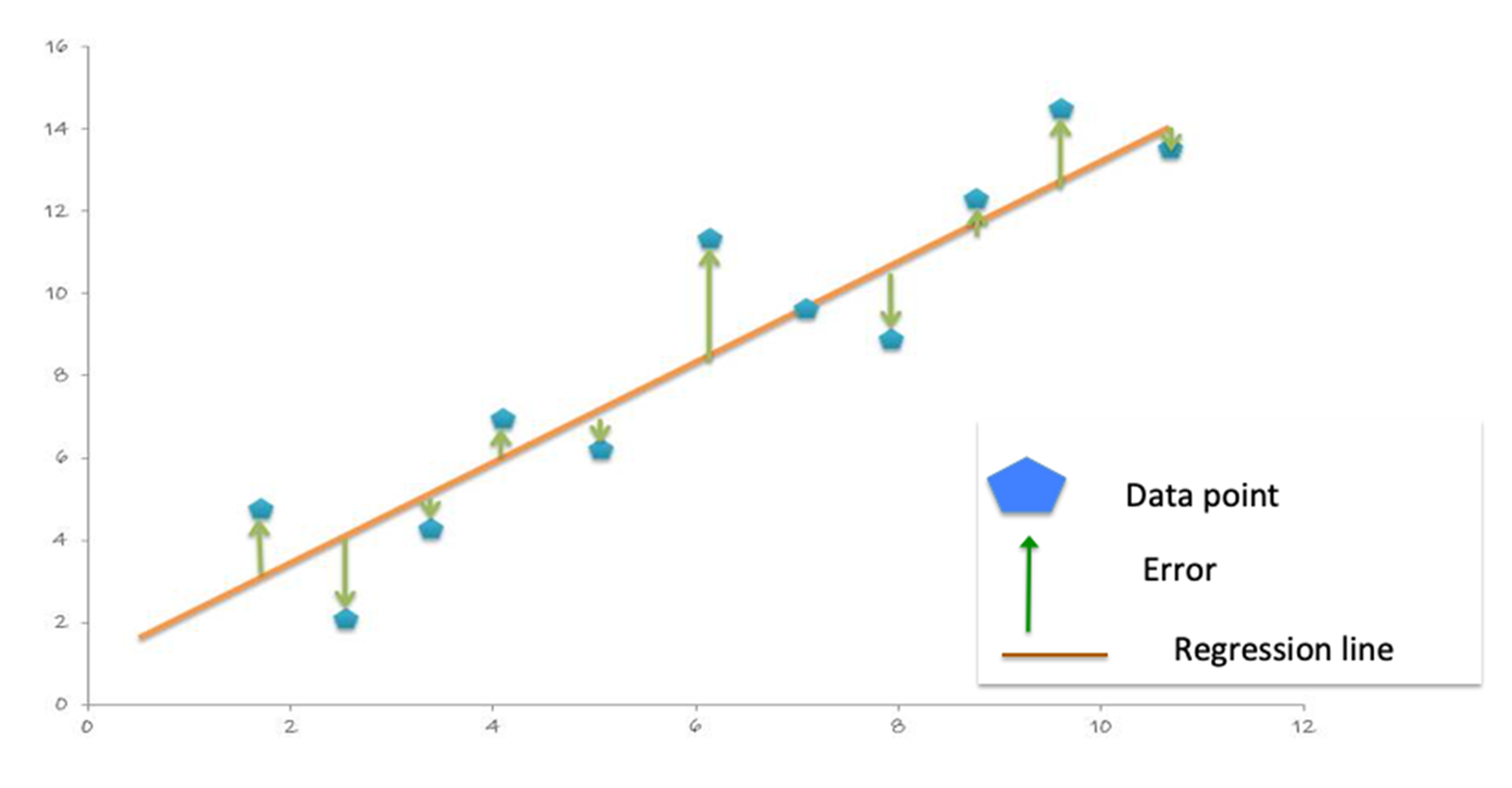

With the predictions and labels from the test data, we can now evaluate the model. To evaluate a regression model, you measure how close the prediction values are to the label values. The error in a prediction, shown by the green lines in the figure, is the difference between the prediction (the Y value on the regression line) and the actual Y value, or label: Error = prediction - label.
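The error definition can be checked by hand on the four (prediction, medhvalue) rows printed by show(5) above. The following plain-Scala sketch (no Spark; the object and method names are hypothetical) computes Error = prediction - label for each pair.

```scala
object PredictionErrors {
  // (prediction, medhvalue) pairs copied from the show(5) output above
  val rows: Seq[(Double, Double)] = Seq(
    (104349.59677450571, 94600.0),
    (77530.43231856065, 85800.0),
    (111369.71756877871, 90100.0),
    (97351.87386020401, 82800.0)
  )

  // Error = prediction - label: positive means the model over-predicted
  def errors(pairs: Seq[(Double, Double)]): Seq[Double] =
    pairs.map { case (prediction, label) => prediction - label }

  def main(args: Array[String]): Unit =
    errors(rows).foreach(e => println(f"$e%.2f"))
}
```

Three of these four errors are positive (over-predictions) and one is negative, so averaging the raw errors would let them partially cancel. That is why the metrics that follow use absolute or squared errors.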

The Mean Absolute Error (MAE) is the mean of the absolute differences between the model's predictions and the labels. Taking the absolute value removes any negative signs, so positive and negative errors do not cancel each other out.

MAE = sum(absolute(prediction - label)) / number of observations
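A minimal plain-Scala version of the MAE formula follows. It is a sketch for checking the arithmetic, not Spark's implementation, and the object and method names are hypothetical.

```scala
object MeanAbsoluteError {
  // MAE = sum(|prediction - label|) / number of observations
  def mae(pairs: Seq[(Double, Double)]): Double = {
    require(pairs.nonEmpty, "MAE needs at least one observation")
    pairs.map { case (prediction, label) => math.abs(prediction - label) }.sum / pairs.size
  }

  def main(args: Array[String]): Unit = {
    // Errors of +3, -3 and 0: the signed errors cancel, the absolute ones do not
    val pairs = Seq((10.0, 7.0), (5.0, 8.0), (4.0, 4.0))
    println(mae(pairs)) // prints 2.0
  }
}
```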

The Mean Squared Error (MSE) is the sum of the squared errors divided by the number of observations. Squaring removes any negative signs and also gives more weight to larger differences. (MSE = sum(squared(prediction - label)) / number of observations).
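The MSE sketch below (plain Scala, hypothetical names) compares two illustrative error patterns with the same total absolute error, to show how squaring weights one large error more heavily than several small ones.

```scala
object MeanSquaredError {
  // MSE = sum((prediction - label)^2) / number of observations
  def mse(pairs: Seq[(Double, Double)]): Double = {
    require(pairs.nonEmpty, "MSE needs at least one observation")
    pairs.map { case (prediction, label) =>
      val error = prediction - label
      error * error
    }.sum / pairs.size
  }

  def main(args: Array[String]): Unit = {
    // Both patterns have a total absolute error of 4.0 (illustrative values)
    val twoSmallErrors = Seq((2.0, 0.0), (2.0, 0.0)) // errors 2 and 2
    val oneLargeError  = Seq((4.0, 0.0), (0.0, 0.0)) // errors 4 and 0
    println(mse(twoSmallErrors)) // prints 4.0
    println(mse(oneLargeError))  // prints 8.0
  }
}
```

Both patterns have the same MAE (2.0), but the single large error doubles the MSE.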

The Root Mean Squared Error (RMSE) is the square root of the MSE, which puts the metric back in the same units as the label (dollars here). The error measures how far each label data point is from the regression line, and RMSE measures how spread out these errors are: when the mean error is close to zero, RMSE is approximately the standard deviation of the prediction errors.
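Because the mean of the squared errors equals the squared mean error plus the population variance of the errors, RMSE can also be recovered from the error column's summary statistics. The sketch below (plain Scala, hypothetical names) does this with the mean, stddev, and count reported by describe later in this section. It assumes that describe reports the sample standard deviation (n - 1 denominator), as Spark's stddev does.

```scala
object RmseVersusStdDev {
  // RMSE = sqrt(MSE) = sqrt(mean of the squared errors)
  def rmse(errors: Seq[Double]): Double = {
    require(errors.nonEmpty, "RMSE needs at least one error")
    math.sqrt(errors.map(e => e * e).sum / errors.size)
  }

  // mean(e^2) = mean(e)^2 + populationVariance(e), and
  // populationVariance = sampleStdDev^2 * (n - 1) / n
  def rmseFromSummary(meanError: Double, sampleStdDev: Double, n: Long): Double = {
    val populationVariance = sampleStdDev * sampleStdDev * (n - 1) / n
    math.sqrt(meanError * meanError + populationVariance)
  }

  def main(args: Array[String]): Unit = {
    // mean, stddev, and count of the error column from the describe() output
    println(rmseFromSummary(759.7600043416329, 52725.56329678355, 4161L))
  }
}
```

With those inputs the result is about 52724.70, in line with the RMSE returned by RegressionEvaluator. Since the mean error (about 760) is small compared with the spread of the errors, RMSE and the error standard deviation nearly coincide here.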

The following code example uses the DataFrame withColumn transformation to add a column for the prediction error, error = prediction - medhvalue. Then we display the summary statistics for the prediction, the median house value, and the error (in dollars).

import org.apache.spark.sql.functions.col

// predictions is a val, so bind the DataFrame with the new error column to a new name
val predictionsWithError = predictions.withColumn("error",
  col("prediction") - col("medhvalue"))

predictionsWithError.select("prediction", "medhvalue", "error").show()

result:

+------------------+---------+-------------------+
|        prediction|medhvalue|              error|
+------------------+---------+-------------------+
| 104349.5967745057|  94600.0|  9749.596774505713|
|  77530.4323185606|  85800.0| -8269.567681439352|
| 101253.3225967887| 103600.0| -2346.677403211302|
+------------------+---------+-------------------+

// count, mean, stddev, min and max for each column
predictionsWithError.describe("prediction", "medhvalue", "error").show()

result:

+-------+-----------------+------------------+------------------+
|summary|       prediction|         medhvalue|             error|
+-------+-----------------+------------------+------------------+
|  count|             4161|              4161|              4161|
|   mean|206307.4865123929|205547.72650805095| 759.7600043416329|
| stddev|97133.45817381598|114708.03790345002| 52725.56329678355|
|    min|56471.09903814694|           26900.0|-339450.5381565819|
|    max|499238.1371374392|          500001.0|293793.71945819416|
+-------+-----------------+------------------+------------------+
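For reference, the statistics in the describe output can be reproduced for a small in-memory sample. This plain-Scala sketch (hypothetical names) computes count, mean, sample standard deviation, min, and max, applied here to the three error values shown in the withColumn output above. It illustrates the definitions and is not Spark's implementation.

```scala
object DescribeSketch {
  final case class Summary(count: Long, mean: Double, stddev: Double, min: Double, max: Double)

  // count, mean, sample standard deviation (n - 1 denominator), min, max
  def summarize(xs: Seq[Double]): Summary = {
    require(xs.size >= 2, "sample standard deviation needs at least two values")
    val n = xs.size
    val mean = xs.sum / n
    val sampleVariance = xs.map(x => (x - mean) * (x - mean)).sum / (n - 1)
    Summary(n.toLong, mean, math.sqrt(sampleVariance), xs.min, xs.max)
  }

  def main(args: Array[String]): Unit = {
    // The three error values from the withColumn output above
    val errors = Seq(9749.596774505713, -8269.567681439352, -2346.677403211302)
    println(summarize(errors))
  }
}
```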

The following code example uses the Spark RegressionEvaluator to calculate the MAE on the predictions DataFrame, which returns 36636.35 (in dollars).

import org.apache.spark.ml.evaluation.RegressionEvaluator

// Compares the "prediction" column (the default prediction column) with the label
val maevaluator = new RegressionEvaluator()
  .setLabelCol("medhvalue")
  .setMetricName("mae")
val mae = maevaluator.evaluate(predictions)

result:

mae: Double = 36636.35

The following code example uses the Spark RegressionEvaluator to calculate the RMSE on the predictions DataFrame, which returns 52724.70 (in dollars).

val evaluator = new RegressionEvaluator()
  .setLabelCol("medhvalue")
  .setMetricName("rmse")
val rmse = evaluator.evaluate(predictions)

result:

rmse: Double = 52724.70