Introducing NVIDIA Reflex: Optimize and Measure Latency in Competitive Games

Today, 73% of GeForce gamers play competitive multiplayer games, or esports. The most watched esport, League of Legends, drew over 100M viewers for its 2019 championship -- more than last year’s NFL Super Bowl. With esports rivaling traditional sports in both viewership and playtime, it’s more important than ever for gamers to have their PC and graphics hardware tuned so they can play their best. This is why, several years ago, NVIDIA invested in an esports laboratory staffed by NVIDIA Research scientists and dedicated to understanding both player and hardware performance in esports. Today, we are excited to share with you the first major fruits of this research.

Alongside our new GeForce RTX 30 Series GPUs, we’re unveiling NVIDIA Reflex, a revolutionary suite of GPU, G-SYNC display, and software technologies that measure and reduce system latency (a.k.a. click-to-display latency) in competitive games. Reducing system latency is critical for competitive gamers, because it allows the PC and display to respond faster to a user’s mouse and keyboard inputs, enabling players to acquire enemies faster and take shots with greater precision.

NVIDIA Reflex features two major new technologies:

NVIDIA Reflex SDK: A new set of APIs for game developers to reduce and measure rendering latency. By integrating directly with the game, Reflex Low Latency Mode aligns game engine work to complete just-in-time for rendering, eliminating the GPU render queue and reducing CPU back pressure in GPU intensive scenes. This delivers latency reductions above and beyond existing driver-only techniques, such as NVIDIA Ultra Low Latency Mode.

“NVIDIA Reflex technology arms developers with new features to minimize latency in their games. We’re seeing excellent responsiveness and player control with Fortnite running on the GeForce RTX 30 Series.” - Nick Penwarden, VP of Engineering, Epic Games.

“Valorant was designed from the beginning to be a precise, competitive FPS, and that means doing everything we can to ensure crisp, responsive gameplay. With GeForce RTX 30 Series and NVIDIA Reflex technology, we're able to serve players around the world with the lowest render latency possible.” - Dave Heironymus, Director of Technology - Valorant / Riot Games

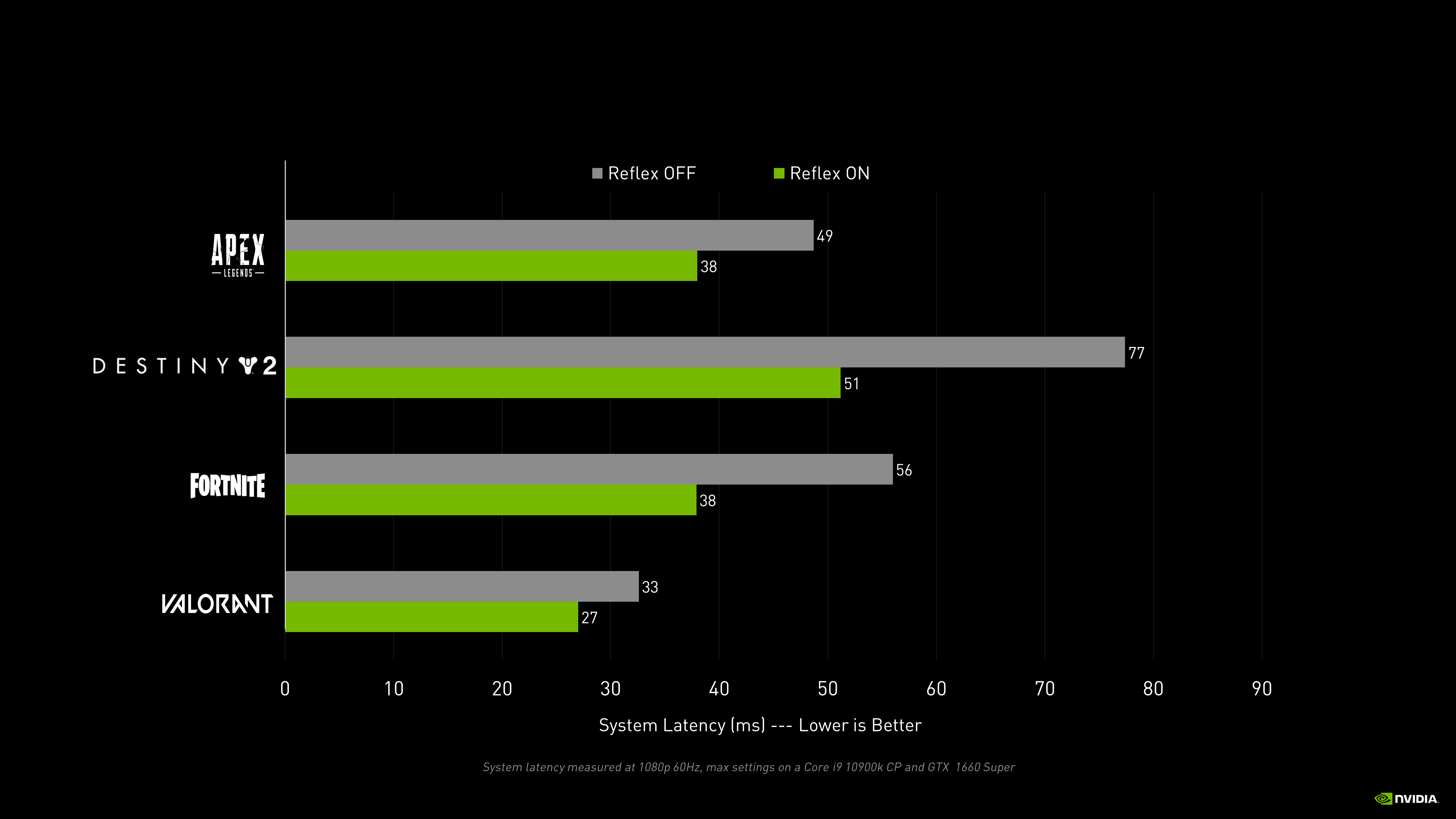

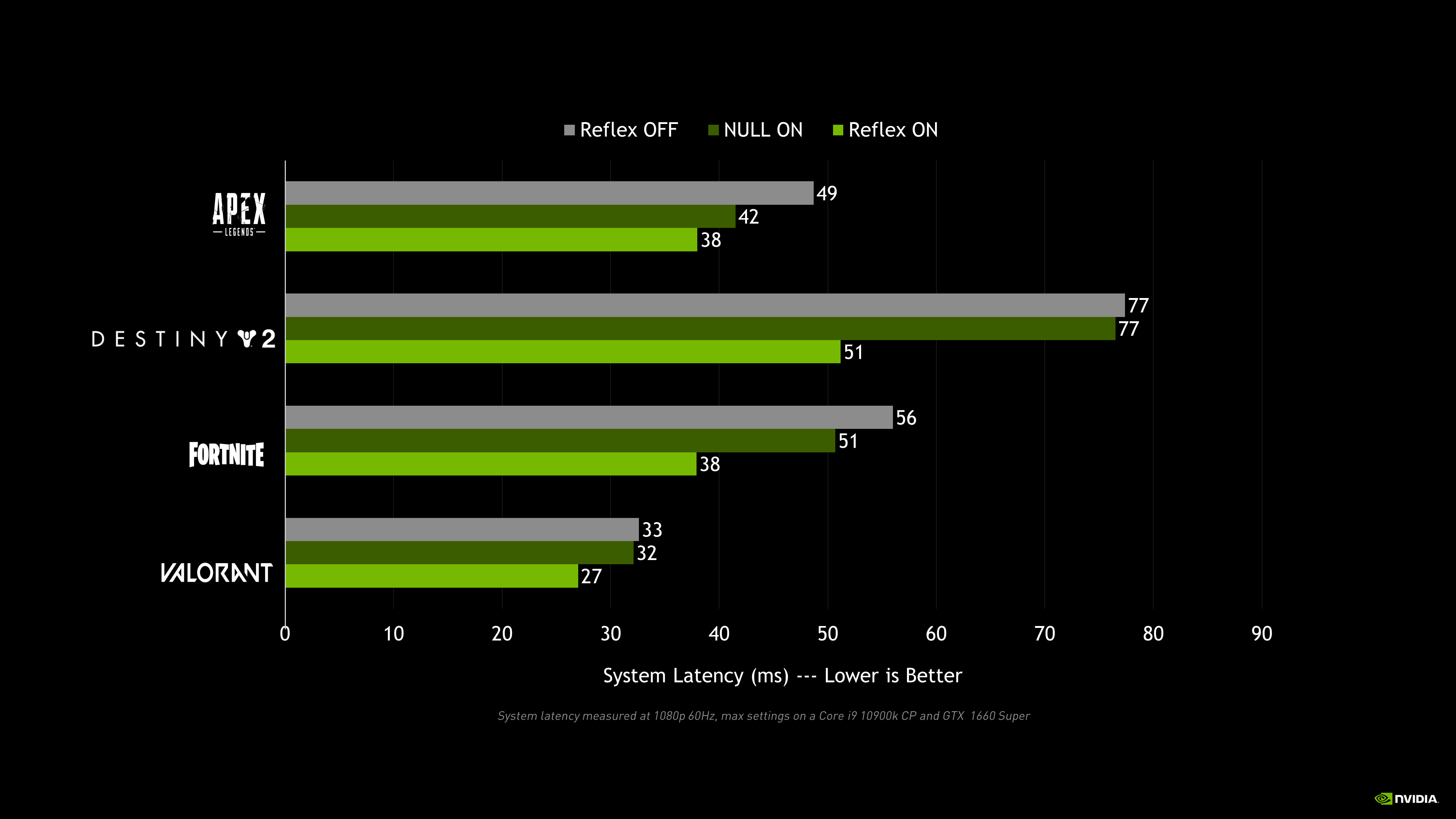

NVIDIA Reflex will deliver latency improvements in GPU-intensive gaming scenarios on GeForce GTX 900 and higher NVIDIA graphics cards in top competitive games, including Fortnite, Valorant, Apex Legends, Call of Duty: Black Ops Cold War, Call of Duty: Modern Warfare, Call of Duty: Warzone, and Destiny 2.

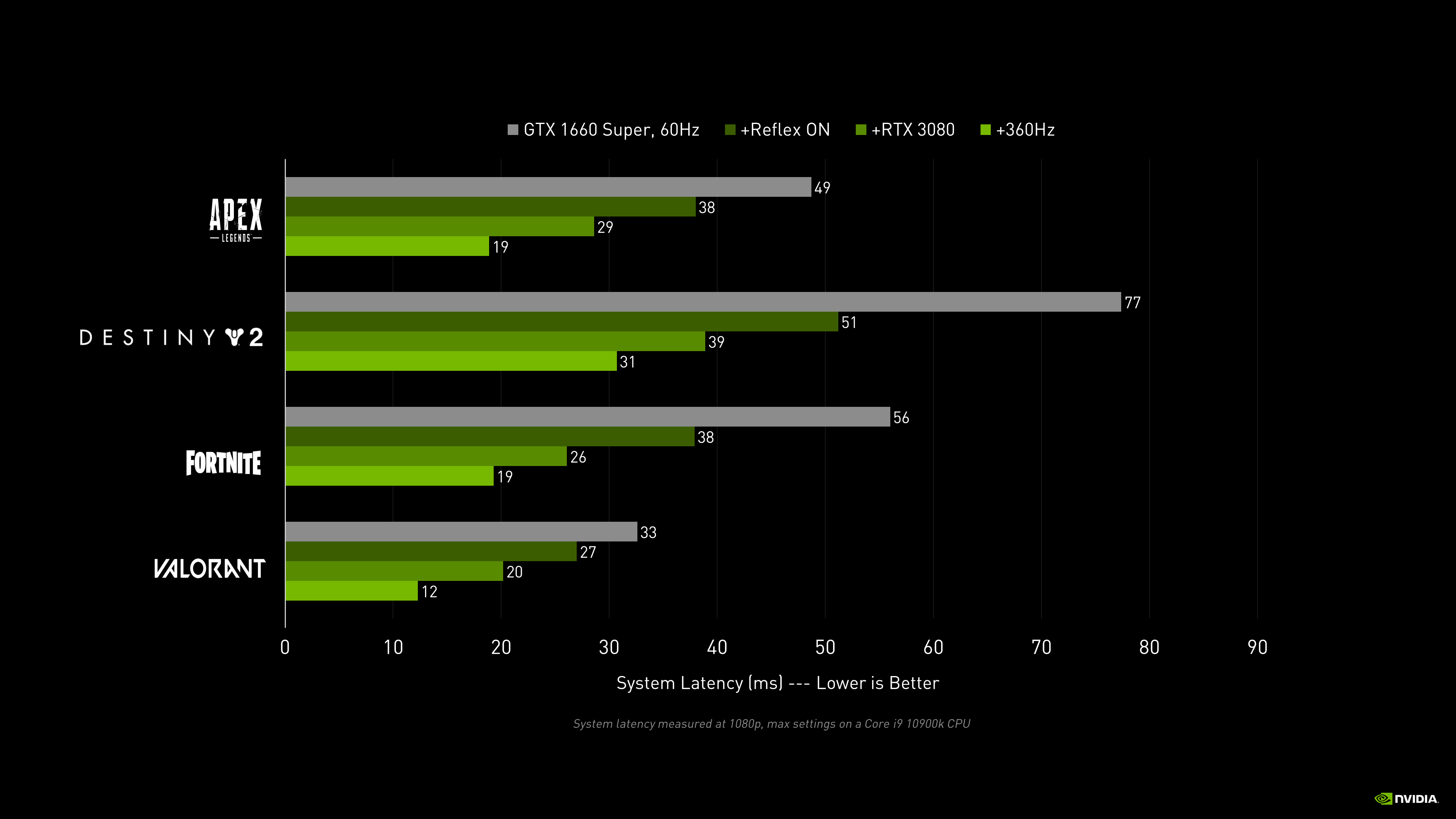

With popular mid-range cards, like the GeForce GTX 1660 Super, gamers can expect up to a 33% improvement in PC responsiveness with Reflex. Add in a GeForce RTX 3080 and a 360Hz G-SYNC esports display, and gamers can play like the pros with the very lowest latency possible.

NVIDIA Reflex Latency Analyzer: A revolutionary system latency measurement tool integrated into new 360Hz G-SYNC Esports displays from ACER, Alienware, ASUS, and MSI, and supported by top esports peripherals from ASUS, Logitech, Razer, and SteelSeries.

Reflex Latency Analyzer detects clicks coming from your mouse and then measures the time it takes for the resulting pixels (e.g. a gun muzzle flash) to change on screen. This type of measurement has been virtually impossible for gamers to do before now, requiring over $7,000 in specialized high-speed cameras and equipment.

Whereas in the past gamers had to guess at their system’s responsiveness based on throughput metrics such as Frames Per Second (FPS), Reflex Latency Analyzer provides a much more complete and accurate understanding of mouse, PC, and display performance. Now with Reflex Latency Analyzer, competitive gamers can start a match with confidence, knowing their system is operating exactly as it should be.

In this article, we are going to provide a deep dive into system latency and NVIDIA Reflex technology -- so buckle up!

- So What Is Latency Anyway?

- End to End System Latency

- What’s The Difference Between FPS and System Latency?

- Why Does System Latency Matter?

- Reducing System Latency with NVIDIA Reflex

- Automatic Tuning in GeForce Experience

- Measuring System Latency with NVIDIA Reflex

- Training Your Aim with Lower Latency

- Wrapping Up

So What Is Latency Anyway?

Latency is a measure of time describing the delay between a desired action and the expected result. When we use a credit card to pay for something online or at the grocery store, the delay it takes for our purchase to be confirmed is latency.

Gamers primarily experience two kinds of latency: system latency and network latency.

Network latency is the round trip delay between the game client and the multiplayer server - more commonly known as “ping”.

This delay can affect our gameplay in a few different ways depending on how the game’s networking code handles network latency. Below are a few examples:

- Delayed hit confirmations, like when your shot hits, but you don’t get the kill confirmation until much later. This can result in wasted ammo or delayed aiming transfers to your next target.

- Delayed interactions with world objects, like opening doors or loot chests.

- Delayed positions of opponents, which results in what is known as “peeker’s advantage” (more about this later)

Note that network latency is not the same as network instability issues, such as packet loss and out-of-order packets. Network instability can cause problems like rubberbanding and desync. Rubberbanding is when you move around in the game, only to be reset to your location from a few seconds ago. Like a rubber band, you elastically snap back to your position as seen by the server. Desync is when you have packet loss, resulting in network stutter. This will look like enemies pausing for a second and then teleporting forward into their correct position. Neither of these common problems is a network latency issue, but both typically happen when packets have further to travel, and thus they are correlated with higher latency.

System latency is the delay between your mouse or keyboard actions and the resulting pixel changes on the display, say from a gun muzzle flash or character movement. This is also referred to as click-to-display or end-to-end system latency. This latency doesn’t involve the game server - just your peripherals, PC, and display.

This delay impacts gameplay in a number of different ways. Here are a few examples:

- Delayed responsiveness, like when you move the mouse but your aim on screen lags behind.

- Delayed shots, like when you shoot, but the bullet hole decals, bullet tracers, and weapon recoil lag behind the actual mouse click.

- Delayed positions of opponents, known as “peeker’s advantage”. (yes, “peeker’s advantage” is also impacted by your system latency!)

At a high level, there are three main stages in system latency - the peripheral (such as a mouse), the PC, and the display. Unfortunately, this latency has been difficult to describe due to the use of the term “input latency” or “input lag” to describe different pieces of the system latency.

For example, you can find “Input Latency” on the box of a mouse when talking about how long the mouse takes to process your click. You can also find it on the box of a monitor talking about how long the display takes to process the frame. And you can find it mentioned in games and software tools when they are really talking about how long the game takes to process your inputs. If all are named “input latency”, which is it then?

End to End System Latency

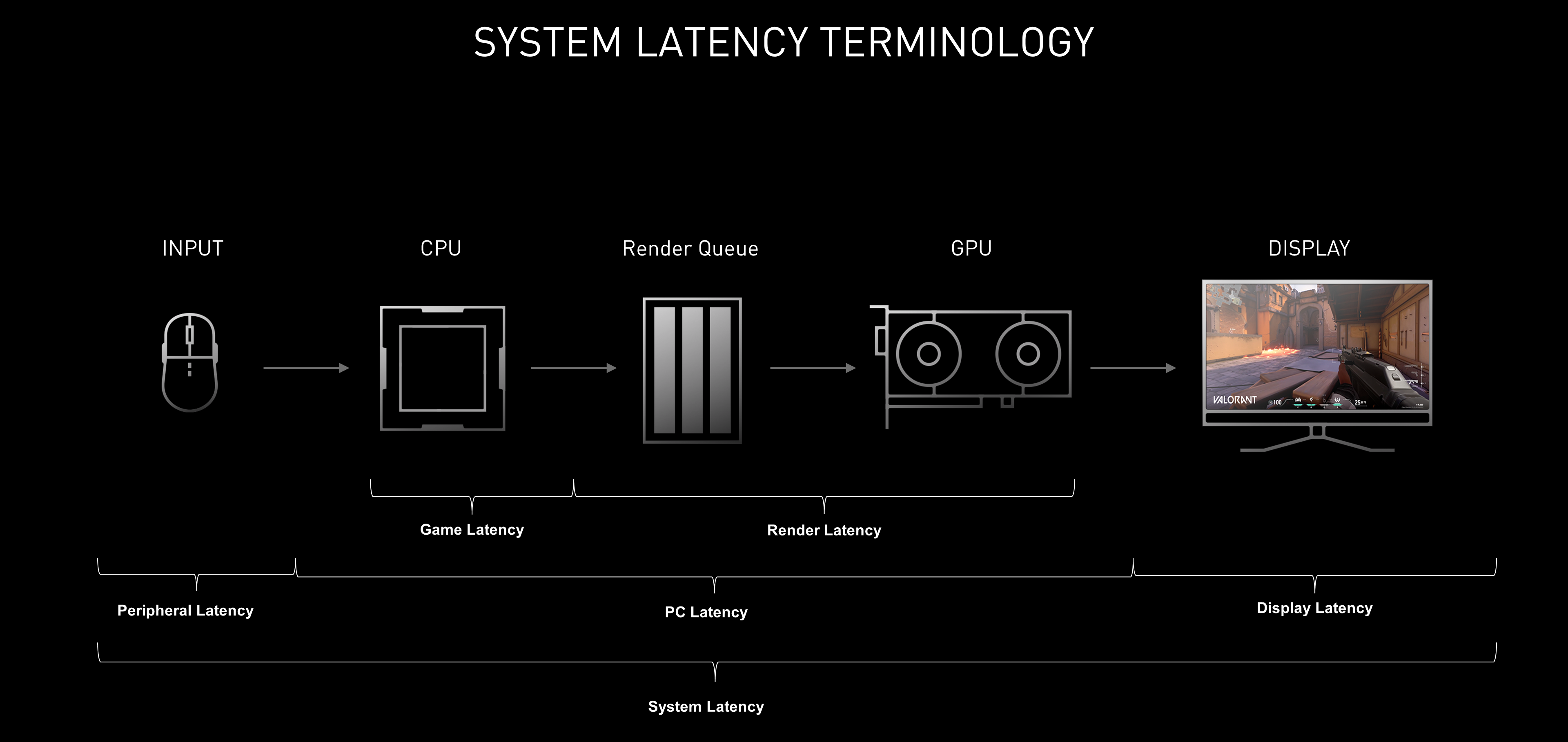

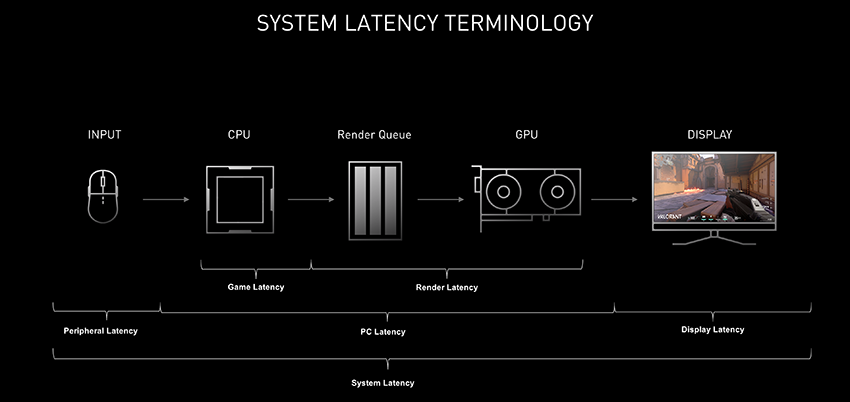

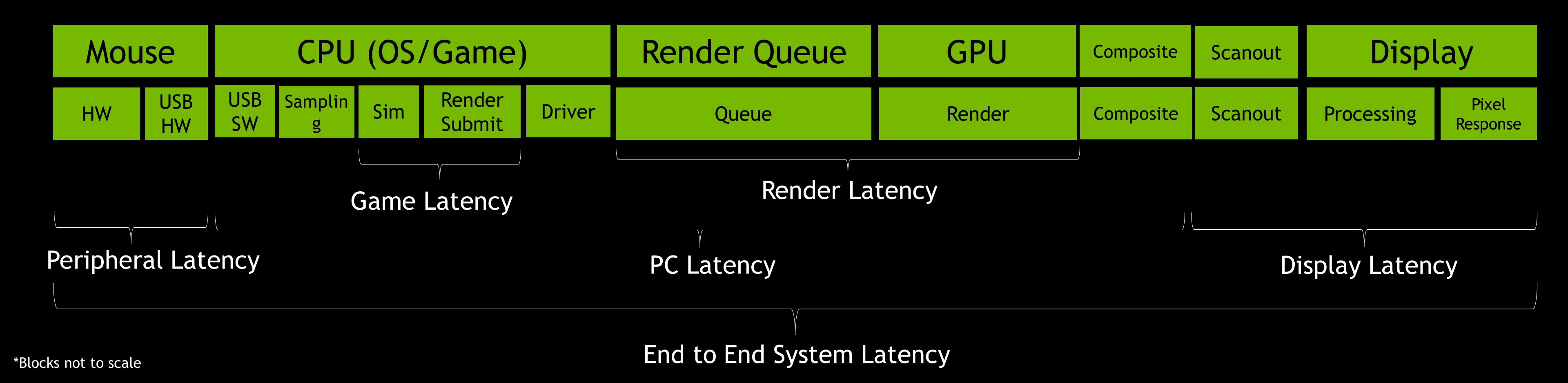

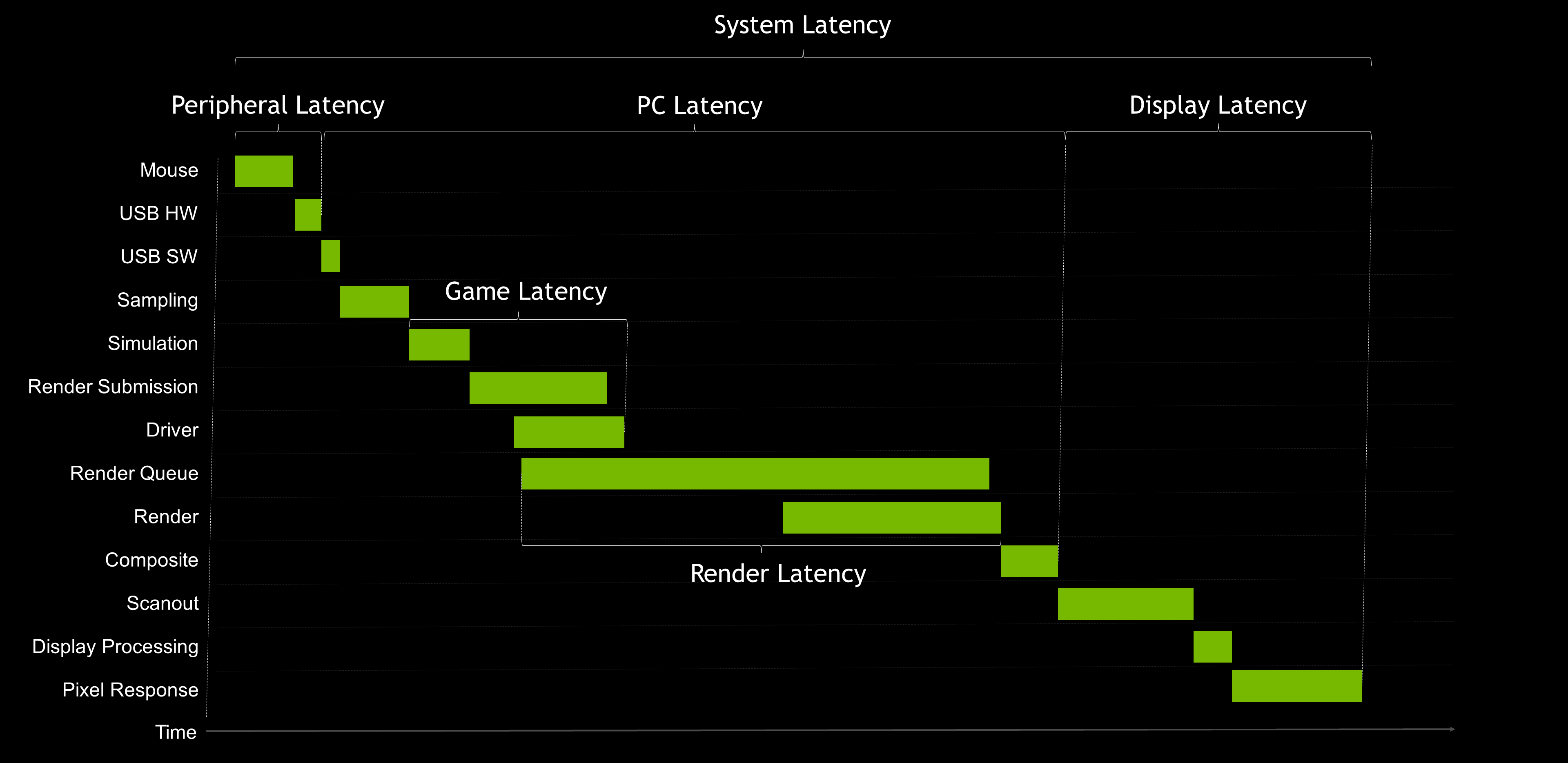

Let’s take a moment to dive one level deeper and define some terms that are more accurate than “Input lag”:

- Peripheral Latency: The time it takes your input device to process your mechanical input and send those input events to the PC

- Game Latency: The time it takes for the CPU to process input or changes to the world and submit a new frame to the GPU to be rendered

- Render Latency: The time from when the frame gets in line to be rendered to when the GPU completely renders the frame

- PC Latency: The time it takes a frame to travel through the PC. This includes both Game and Render Latency

- Display Latency: The time it takes for the display to present a new image after the GPU has finished rendering the frame

- System Latency: The time encompassing the whole end-to-end measurement - from the start of peripheral latency to the end of display latency

These are high level definitions that gloss over some of the details, though they do give us a great foundation to communicate about latency effectively. We will also go into more detail about each of the stages later in the article, so if you want to get more technical, skip ahead to the advanced section.
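To make the relationship between these terms concrete, here is a minimal Python sketch. The class name, the field names, and the millisecond values are illustrative assumptions, not measurements and not part of any NVIDIA API. Only the way the terms add up is taken from the definitions above, and the simple sum ignores any overlap between stages.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    """Hypothetical container for the latency stages defined above (all in ms)."""
    peripheral_ms: float
    game_ms: float
    render_ms: float
    display_ms: float

    @property
    def pc_ms(self) -> float:
        # PC Latency includes both Game Latency and Render Latency.
        return self.game_ms + self.render_ms

    @property
    def system_ms(self) -> float:
        # System Latency runs from the start of peripheral latency
        # to the end of display latency.
        return self.peripheral_ms + self.pc_ms + self.display_ms


# Made-up example values, chosen only to show the arithmetic.
example = LatencyBreakdown(peripheral_ms=2.0, game_ms=10.0, render_ms=15.0, display_ms=8.0)
print(example.pc_ms)      # 25.0
print(example.system_ms)  # 35.0
```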

What’s The Difference Between FPS and System Latency?

In general, higher FPS correlates with lower system latency - however this relationship is far from 1-to-1. To better understand, let’s step back and think about how we can measure our interactions with our PC. First, there is the number of pictures our display can present to us per second. That number is a throughput rate called FPS (Frames Per Second). The second way is the time it takes for our actions to be reflected in one of those pictures -- a duration called System Latency.

If we have a PC that can render 1000 FPS, but it takes one second for our inputs to reach the display, that would be a poor experience. Conversely, if our actions are instantaneous but our framerate is 5 FPS, that won't be a great experience either.
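The two extremes above can be put into numbers with a short Python sketch. The function names are our own and purely illustrative; the only relationships used are that frame time is the inverse of FPS and that the number of frames "in flight" equals throughput multiplied by latency.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames at a given throughput."""
    return 1000.0 / fps


def frames_in_flight(fps: float, system_latency_ms: float) -> float:
    """How many frames are produced during one latency window (throughput x latency)."""
    return fps * system_latency_ms / 1000.0


# 1000 FPS but one full second of system latency:
print(frame_time_ms(1000))           # 1.0
print(frames_in_flight(1000, 1000))  # 1000.0

# Instant response but only 5 FPS: a new picture only every 200 ms.
print(frame_time_ms(5))              # 200.0
```

In the first case a new frame arrives every millisecond, yet each one shows the world as it was a thousand frames ago. In the second case the response is immediate, but the picture only updates five times per second.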

So which one matters more? More than a year ago, we set out to answer this question and the findings are quite interesting. We published our full research at SIGGRAPH Asia, but in short, we found that system latency impacted subjects' ability to complete aiming tasks in an aim trainer much more than the rate of frames displayed on their monitor. But why is that?

Why Does System Latency Matter?

Let’s start to answer this question by looking at examples in real games:

First, let’s take a look at hit registration. Hit registration is a term gamers use when talking about how well the game registers their shots onto another player. We often blame hit registration when we KNOW we hit that shot. We have all been there. But is it really hit registration?

In this shot above, the mouse button was pressed when the crosshair was over the target, but we still missed. Due to system latency and the movement of the opponent, the game engine read the position of your crosshair as actually being behind the target. In fact, what you see on your display is behind the current state of the game engine. This is simply because it takes a while for the PC to process the information, render the frame, and present it to the display. In games where milliseconds matter, 30-40 extra ms of delay can mean missing that game-winning frag.
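As a rough illustration of why a few tens of milliseconds matter, the sketch below estimates how far a moving target travels during the extra delay. The target speed of 250 game units per second is a made-up value for illustration, and the function name is our own; the 30 and 40 ms delays are the figures mentioned above.

```python
def target_travel(speed_units_per_s: float, latency_ms: float) -> float:
    """Distance a target covers while the displayed frame lags behind the game state."""
    return speed_units_per_s * latency_ms / 1000.0


# Hypothetical target speed; real movement speeds depend on the game.
for latency_ms in (30, 40):
    print(latency_ms, target_travel(250.0, latency_ms))
# 30 7.5
# 40 10.0
```

If the target's hitbox is narrower than that distance, a click placed on the target as displayed can land behind it in the game engine's view of the world.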

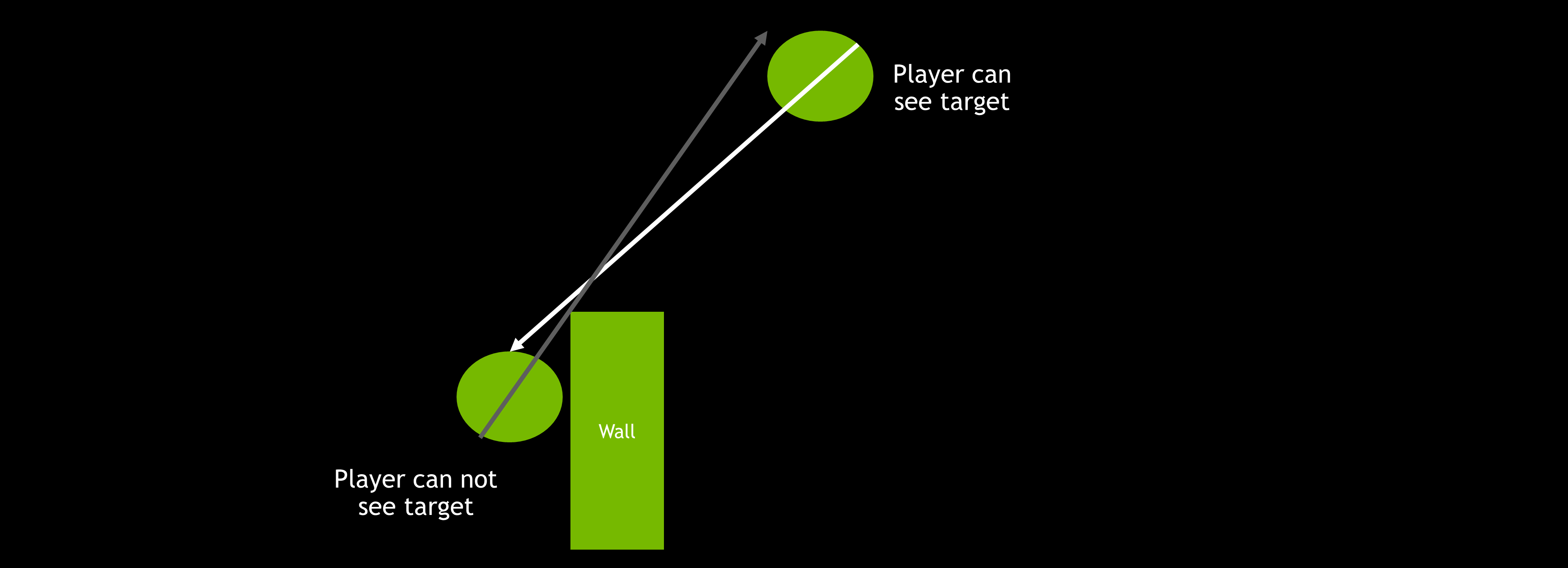

Second, let’s cover “peeker's advantage”. At high levels of competitive play, you typically hold an angle when you have an extreme angle advantage (when you are further away from the corner than your opponent) to offset a characteristic of online games called “peeker’s advantage”.

Peeker’s advantage is the split second advantage the attacker gets when peeking around a corner at a player holding an angle. Because it takes time for the attacking player’s positional information to reach the defender over the network, the attacking player has an inherent advantage. To offset this, players will ‘jiggle peek’ the corner, quickly peeking and ducking back into cover, as it will allow them to see an enemy before they are seen, providing a split second advantage. This phenomenon is often seen as a characteristic of the game networking code or network latency. However, system latency can play a large role in peeker’s advantage.

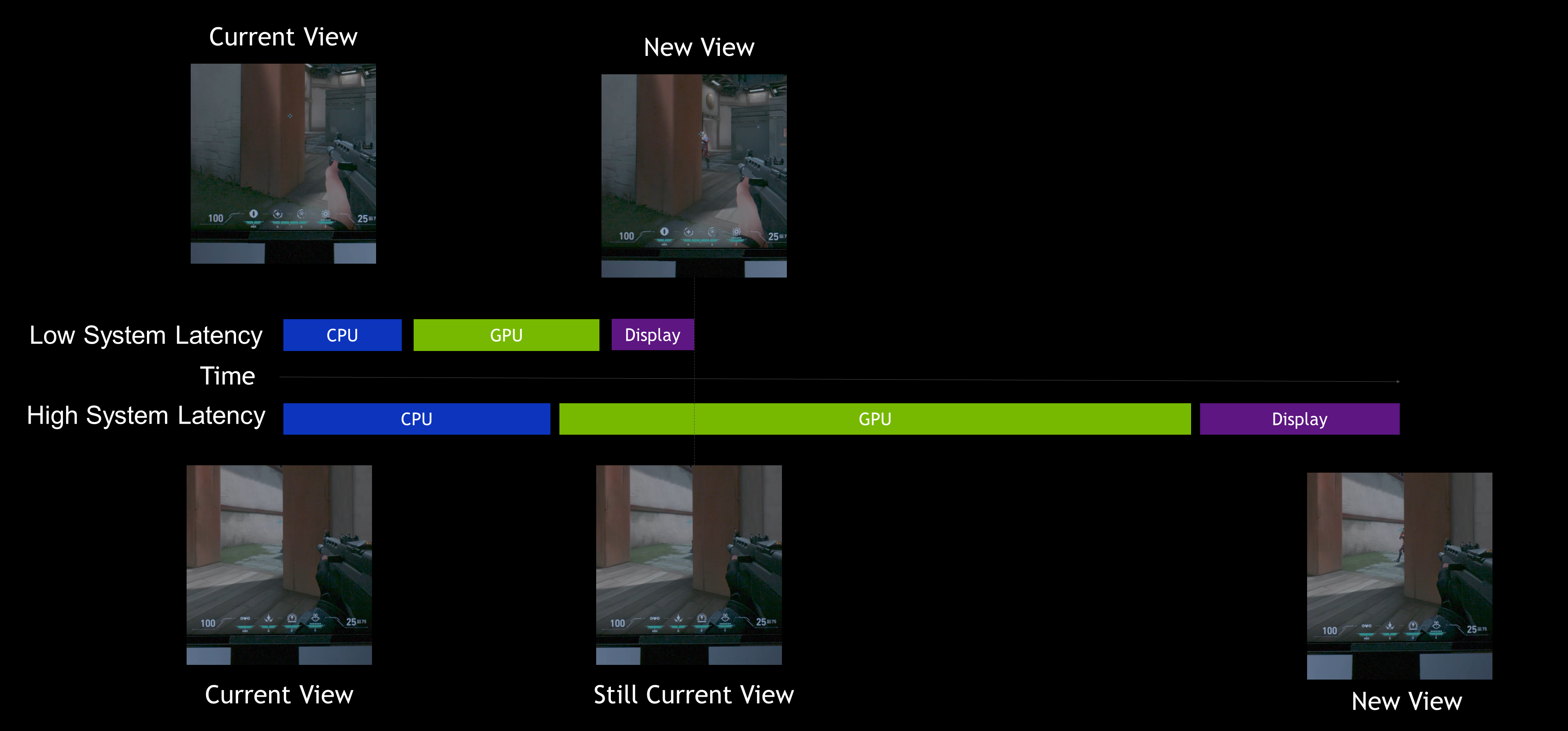

As you can see in the shot above, both players were equally distanced from the angle, and their pings were the same. The only difference was their system latencies.

Similar to the hit registration explanation, at higher system latency your view of the world is delayed - allowing for the target to see you before you see the target. If your system latency is much lower than your opponent’s, you can potentially mitigate peeker’s advantage completely. There are still the effects of the game networking at play here, but in general, lower system latency helps to both mitigate peeker’s advantage on defense and take advantage on attack.

Finally, let’s discuss aiming accuracy. In particular, flick shots. Drilling flicks is probably the most important training you can do for competitive games like CS:GO or Valorant. In a split second you must acquire your target, flick to it, and click with incredible precision that requires millisecond accuracy. But do you ever feel like no matter what you do, your flicks aren’t consistent?

Aiming involves a series of sub-movements - subconscious corrections based on the current position of the crosshair relative to the target’s location. At higher latencies, this feedback loop time is increased resulting in less precision. Additionally, at higher average latencies, the latency varies more, meaning that it’s harder for your body to predict and adapt to. The end result is pretty clear - high latency means less precision.

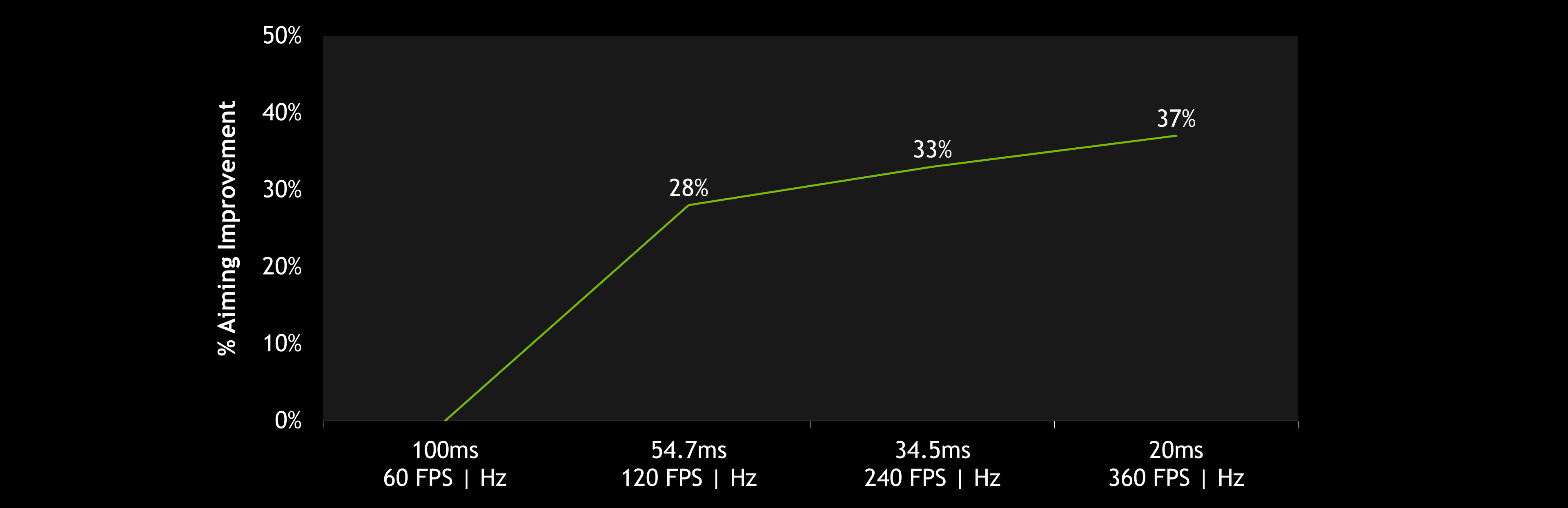

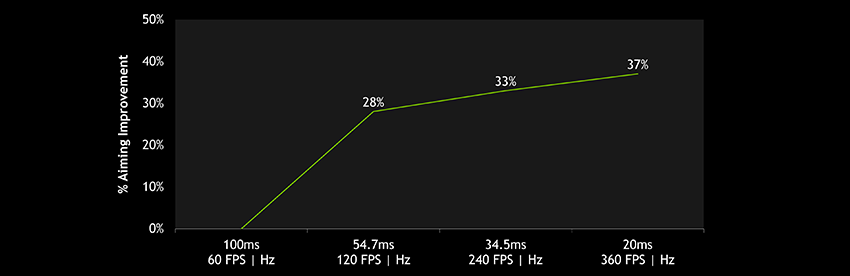

And that brings us to the results of our study we mentioned earlier. In the chart below, you can see how lower latency had a large impact when measuring flick shot accuracy.

In competitive games, higher FPS and refresh rates (Hz) reduce your latency, delivering more opportunities for your inputs to end up on screen. Even small reductions in latency have an impact on flicking performance. In our latest Esports Research blog, the NVIDIA Research team explored the ways in which different levels of system latency affect player performance.

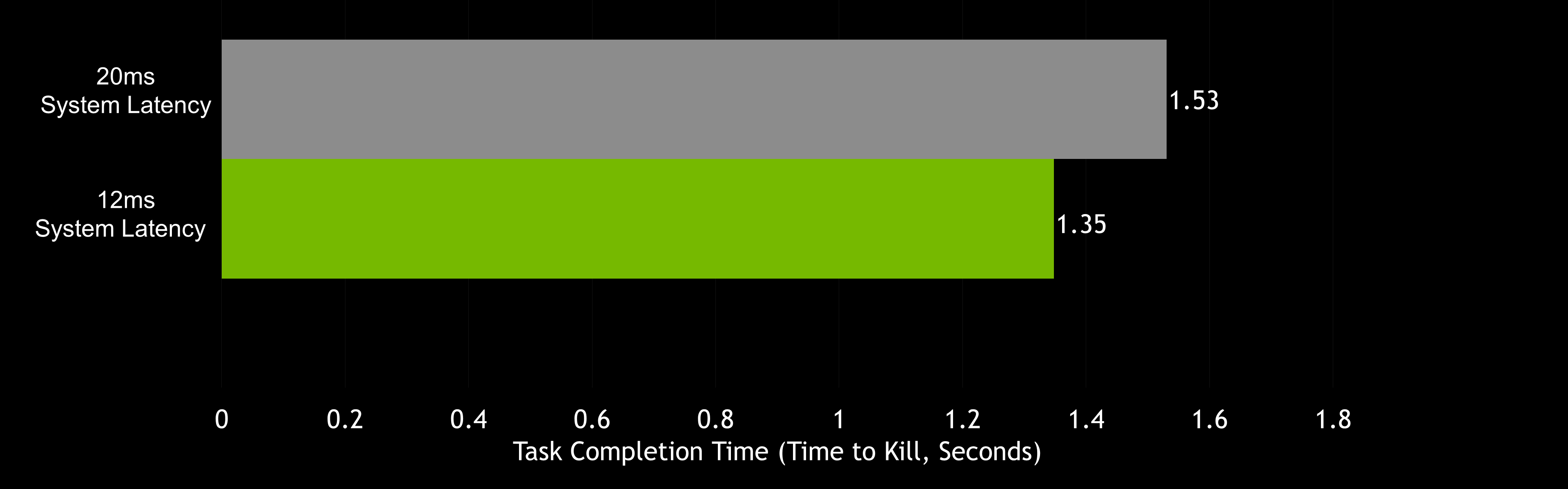

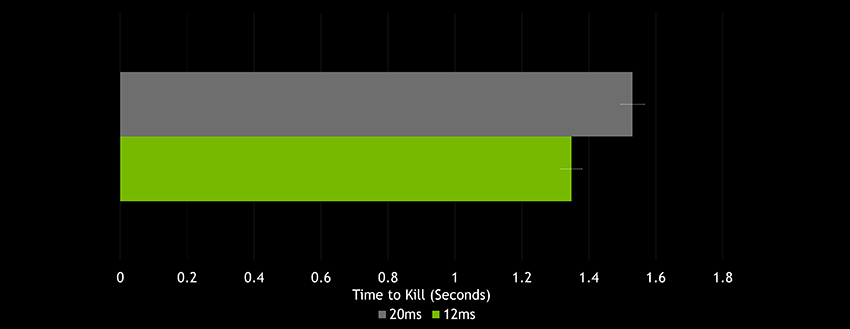

NVIDIA Research has found that even minor differences in system latency -- 12ms vs. 20ms -- can make a significant difference in aiming performance. In fact, the average difference in aiming task completion time (the time it takes to acquire and shoot a target) between 12ms and 20ms PCs was measured to be 182ms - that is about 23 times the system latency difference. To put that into perspective, given the same target difficulty, on a 128 tick Valorant or CS:GO server, your shots will land on target an average of 23 ticks earlier on the 12ms PC setup. Yet most gamers play on systems with 50-100ms of system latency!
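The arithmetic behind these figures can be checked with a few lines of Python. The inputs are the numbers quoted above (12ms, 20ms, 182ms, and a 128 tick server); the variable names are our own.

```python
# Figures quoted in the paragraph above.
latency_gap_ms = 20 - 12      # difference in system latency between the two PCs
completion_gap_ms = 182       # measured difference in aiming task completion time
tick_rate_hz = 128            # server tick rate

tick_ms = 1000 / tick_rate_hz                 # duration of one server tick
ratio = completion_gap_ms / latency_gap_ms    # completion gap relative to latency gap
ticks = completion_gap_ms / tick_ms           # completion gap expressed in ticks

print(tick_ms)           # 7.8125
print(ratio)             # 22.75
print(round(ticks, 1))   # 23.3
```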

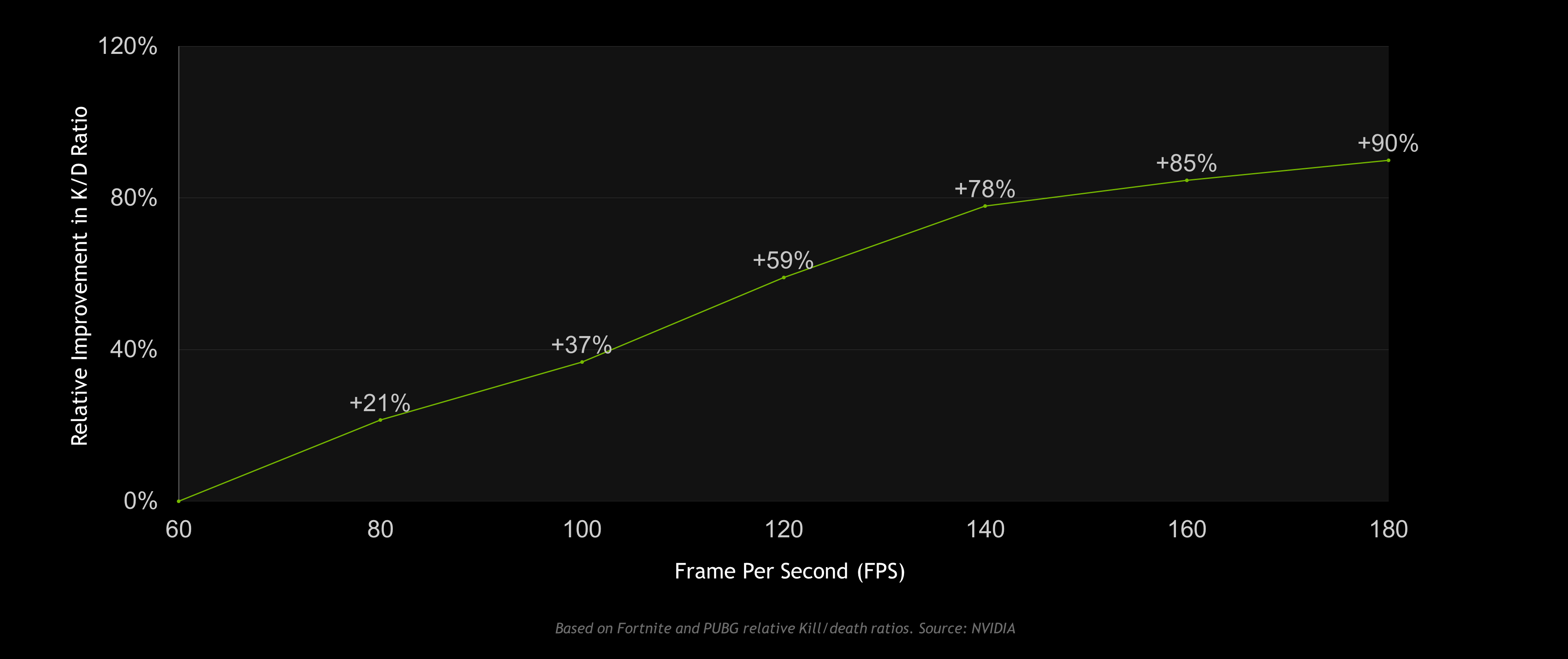

So does this translate into actually being more successful in games? Being good at competitive shooters involves much more than just mechanical skill. A keen game sense and battle-hardened strategy can go a long way towards getting a chicken dinner or clutching the round. However, looking at our PUBG and Fortnite data, we see a similar correlation between higher FPS (lower latency) and K/D (kill to death) ratios.

By no means does correlation mean causation. But applying the above science to this correlation, we see a lot of evidence to support the claim that higher FPS and lower system latency lead to landing shots more frequently - boosting K/D ratios.

Reducing System Latency with NVIDIA Reflex

With the launch of NVIDIA Reflex, we set out to optimize every aspect of the rendering pipeline for latency using a combination of SDKs and driver optimizations. Some of these techniques can result in large latency savings, while others will have more modest benefits, depending on the situation. Regardless, NVIDIA Reflex is our commitment to delivering gamers and developers tools to optimize for system latency.

NVIDIA Reflex SDK

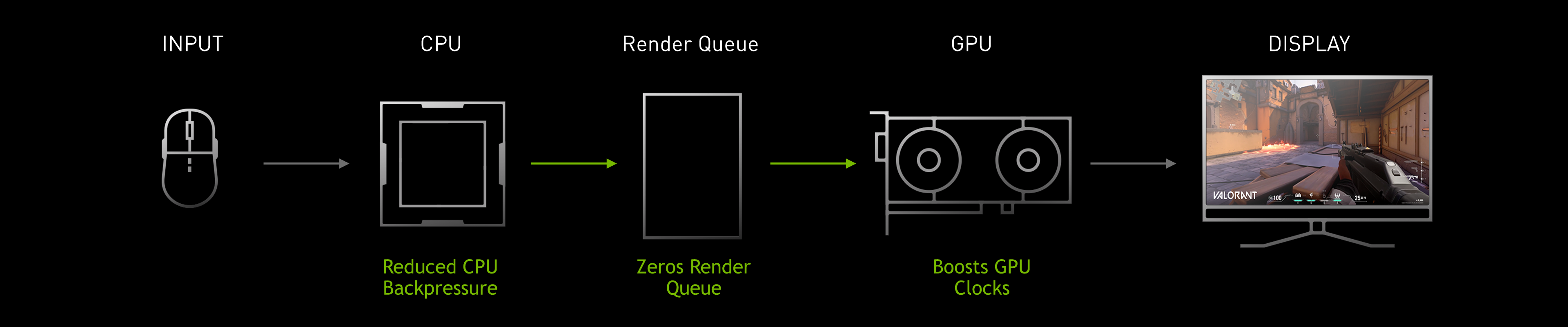

The Reflex SDK allows game developers to implement a low latency mode that aligns game engine work to complete just-in-time for rendering, eliminating the GPU render queue and reducing CPU back pressure in GPU-bound scenarios.

In the above image, we can see that the queue is filled with frames. The CPU is processing frames faster than the GPU can render them, causing this backup and resulting in an increase in render latency. The Reflex SDK shares some similarities with the Ultra Low Latency Mode in the driver; however, by integrating directly into the game, we are able to control the amount of back-pressure the CPU receives from the render queue and other later stages of the pipeline. While the Ultra Low Latency mode can often reduce the render queue, it cannot remove the increased back-pressure on the game and CPU side. Thus, the latency benefits from the Reflex SDK are generally much better than the Ultra Low Latency mode in the driver.

When developers integrate the Reflex SDK, they are able to effectively delay the sampling of input and game simulation by dynamically adjusting the submission timing of rendering work to the GPU so that they are processed just-in-time.
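The idea of delaying CPU work so that it finishes just in time can be illustrated with a toy model in Python. This is a sketch under strong assumptions, not the Reflex SDK's actual algorithm: CPU and GPU times are fixed per frame, the GPU finish time of the previous frame is assumed to be predicted perfectly, and the function name is hypothetical.

```python
from typing import List


def just_in_time_latencies(cpu_ms: float, gpu_ms: float, n_frames: int) -> List[float]:
    """Per-frame latency (CPU start to GPU finish) when CPU work is delayed
    so that it completes right as the GPU becomes free."""
    latencies = []
    prev_cpu_end = 0.0
    prev_gpu_end = 0.0
    for _ in range(n_frames):
        # Start as late as possible: just early enough that the CPU work
        # is done when the GPU finishes the previous frame.
        cpu_start = max(prev_cpu_end, prev_gpu_end - cpu_ms)
        cpu_end = cpu_start + cpu_ms
        # The GPU picks the frame up as soon as both the frame and the GPU are ready.
        gpu_start = max(cpu_end, prev_gpu_end)
        gpu_end = gpu_start + gpu_ms
        latencies.append(gpu_end - cpu_start)
        prev_cpu_end, prev_gpu_end = cpu_end, gpu_end
    return latencies


# GPU-bound example: 4 ms of CPU work, 10 ms of GPU work per frame (made-up values).
print(just_in_time_latencies(4.0, 10.0, 5))  # [14.0, 14.0, 14.0, 14.0, 14.0]
```

In this model every frame spends no time waiting in a queue: latency stays at CPU time plus GPU time, while the GPU still renders frames back to back, so throughput is unchanged.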

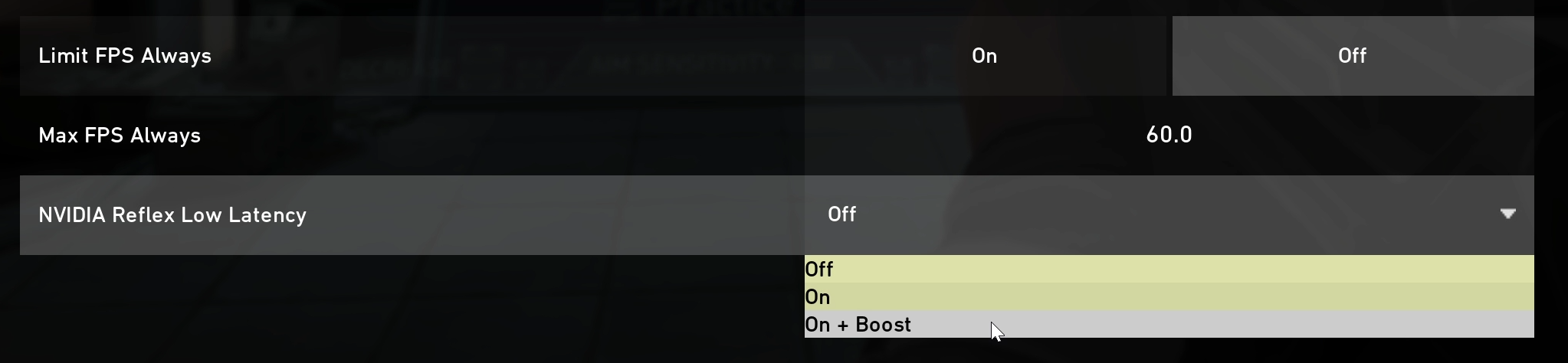

Additionally, the SDK also offers a feature called Low Latency Boost. This feature overrides the power saving features in the GPU to allow the GPU clocks to stay high when heavily CPU-bound. Even when the game is CPU-bound, longer rendering times add latency. Keeping the clocks higher can consume significantly more power, but can reduce latency slightly when the GPU is significantly underutilized and the CPU submits the final rendering work in a large batch. Note that if you do not want the power tradeoff, you can use Reflex Low Latency mode without the Boost enabled.

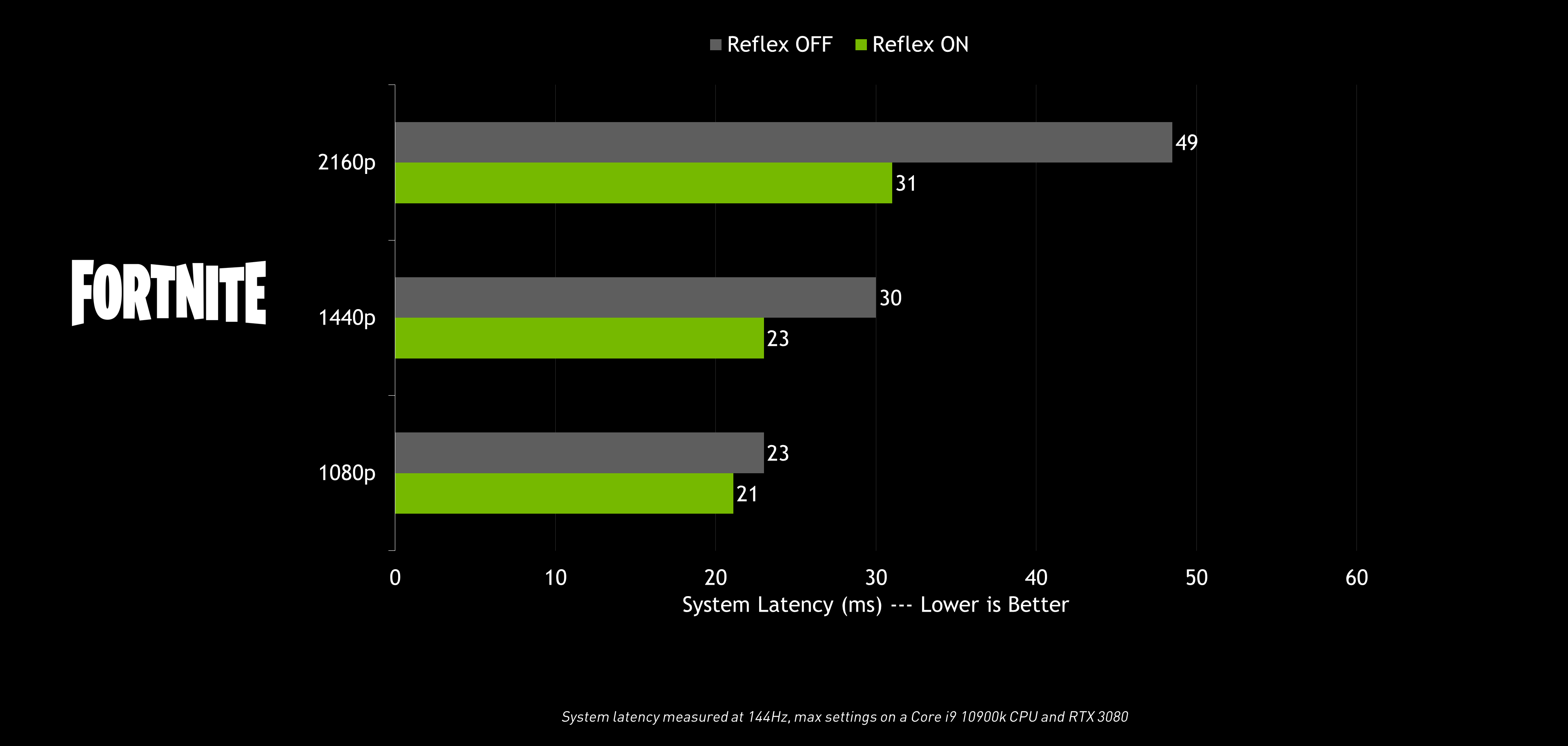

Competitive gamers generally avoid higher resolutions when playing first person shooters due to the increased rendering load and latency. However, with NVIDIA Reflex, you can get lower latency at higher resolutions - enabling competitive play for gamers who enjoy great image quality and a responsive experience.

Competitive shooters are dynamic - changing back and forth between GPU and CPU boundedness. If there is an explosion with lots of particles and the game becomes GPU-bound, the Reflex SDK will keep the latency low by not letting the work for the GPU queue up. If the rendering is simple and the game is CPU bound, the Reflex SDK will keep latency low by maintaining high GPU clock frequencies. Regardless of the state of the rendering pipeline, the Reflex SDK intelligently reduces render latency for the given configuration. With the Reflex SDK, gamers can stay in the rendering latency sweet spot without having to turn all their settings to low.

At the time of the Reflex SDK announcement, the following games plan to support NVIDIA Reflex with our next Game Ready Driver on September 17th, 2020: Fortnite and Valorant. Additionally, the following games have announced support for NVIDIA Reflex coming soon: Apex Legends, Call of Duty: Black Ops Cold War, Call of Duty: Warzone, Cuisine Royale, Destiny 2, Enlisted, KovaaK 2.0, and Mordhau.

The NVIDIA Reflex SDK supports GPUs all the way back to 2014’s GeForce GTX 900 Series products. The Low Latency Boost mode is supported on all GPUs, however, GeForce RTX 30 Series GPUs will maintain a slightly higher clock rate to further reduce latency.

For those of you who really want to dig into how the SDK works, we will cover the rendering pipeline, CPU/GPU-boundedness, and how latency is reduced in more detail in the advanced section.

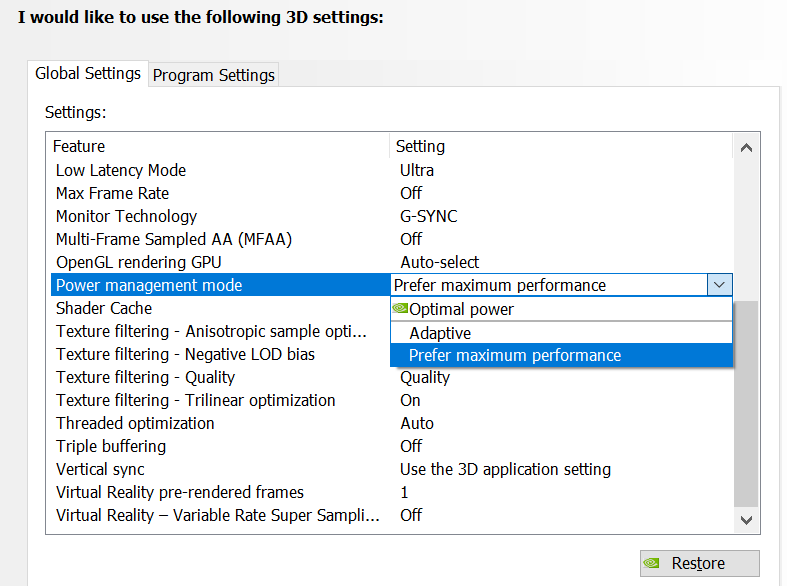

Ultra Low Latency Mode

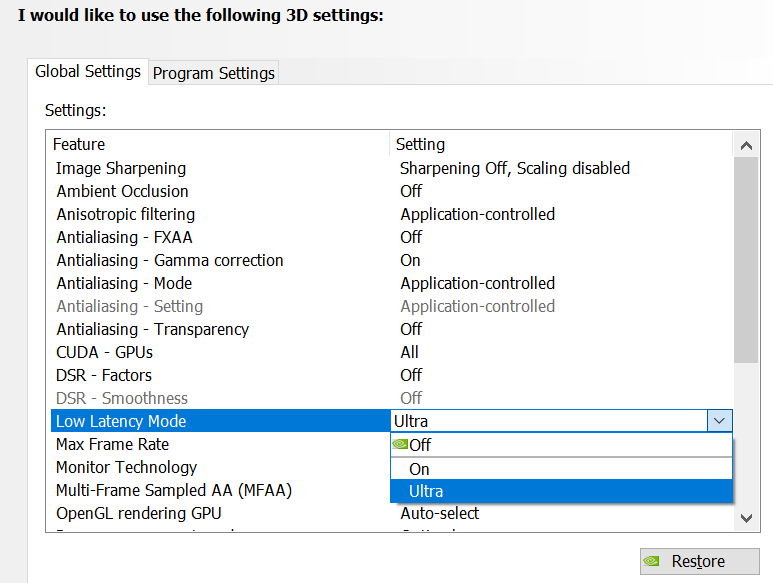

If a game doesn't support the Reflex SDK, you can still get partial latency improvements by enabling NVIDIA Ultra Low Latency mode from the NVIDIA Control Panel. Simply open the control panel and navigate to Manage 3D Settings, then Low Latency Mode, and select the Ultra option. As mentioned earlier in the article, this will help reduce the rendering latency, but without full control of the pipeline.

If a game supports the NVIDIA Reflex Low Latency mode, we recommend using that mode over the Ultra Low Latency mode in the driver. However, if you leave both on, the Reflex Low Latency mode will take higher priority automatically for you.

Prefer Maximum Performance

The NVIDIA graphics driver has long shipped with an option called “Power Management Mode”. This option allows gamers to choose how the GPU operates in CPU-bound scenarios. When the GPU is saturated with work, it will always run at maximum performance. However, when the GPU is not saturated with work there is an opportunity to save power by reducing GPU clocks while maintaining FPS.

Similar to the Low Latency Boost feature in the Reflex SDK, the Prefer Maximum Performance mode overrides the power savings features in the GPU and allows the GPU to always run at higher clocks. These higher clocks can reduce latency in CPU-bound instances at a tradeoff of higher power consumption. This mode is designed for gamers who want to squeeze every last microsecond of latency out of the pipeline regardless of power.

With GeForce RTX 30 Series GPUs, we are able to set this clock value higher than before, allowing the GPU to target the absolute lowest render latency possible when CPU-bound. Users with older GPUs can still turn on Prefer Maximum Performance and hold clocks to base frequencies.

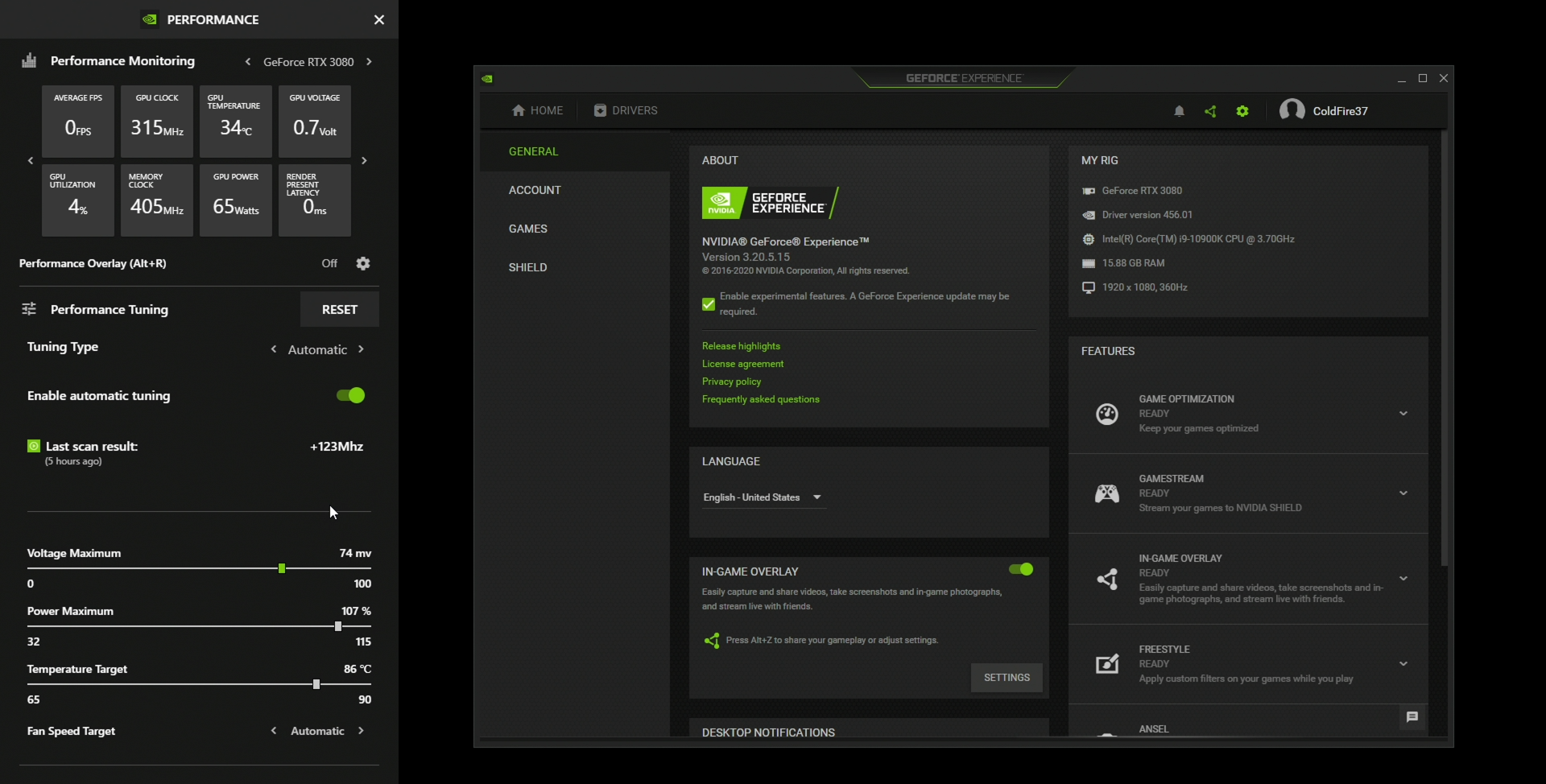

Automatic Tuning in GeForce Experience

With the release of a new GeForce Experience update coming in September, there’ll be a new beta feature in the in-game overlay performance panel that allows gamers to tune their GPU for lower render latency with a single click.

This advanced automatic tuner scans your GPU for the maximum frequency bump at each voltage point on the curve. Once it has found and applied the perfect settings for your GPU, it also retests and maintains your tuning over time - keeping your tune stable.

Stay tuned to GeForce.com for further details and a how-to for this exciting new feature.

Measuring System Latency with NVIDIA Reflex

One of the main reasons why system latency hasn’t been widely talked about until now is that it’s been incredibly difficult to accurately measure. In order to measure latency, your measurement device has to be able to accurately know the start and end times of the measurement.

Traditionally, measuring system latency has only been accomplished with expensive and cumbersome high speed cameras, engineering equipment, and a modified mouse and LED to track when the mouse button has been pressed. With a 1000 FPS high speed camera, you can measure a minimum of 1ms of latency. However, a setup like this starts at about $7,000 USD for the bare minimum equipment. Even then, once you have the setup, it takes roughly 3 minutes for each measurement… pretty much a non-starter for 99.9% of gamers.
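The numbers in this paragraph follow from simple arithmetic, sketched below in Python. The function names and the example of 45 counted frames and 20 measurements are hypothetical; the 1000 FPS camera and the roughly 3 minutes per measurement come from the text above.

```python
def camera_resolution_ms(camera_fps: float) -> float:
    """Smallest time step a high speed camera can resolve: one frame interval."""
    return 1000.0 / camera_fps


def latency_from_frame_count(frames_between: int, camera_fps: float) -> float:
    """Latency estimated by counting camera frames between the click LED
    lighting up and the first visible pixel change."""
    return frames_between * camera_resolution_ms(camera_fps)


def session_minutes(measurements: int, minutes_per_measurement: float = 3.0) -> float:
    """Total effort for a batch of manual measurements."""
    return measurements * minutes_per_measurement


print(camera_resolution_ms(1000))          # 1.0
print(latency_from_frame_count(45, 1000))  # 45.0
print(session_minutes(20))                 # 60.0
```

Because latency varies from click to click, a single measurement says little, so a useful average requires many of these 3 minute measurements.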

NVIDIA Reflex Latency Analyzer

Compatible 360Hz G-SYNC displays launching this Fall come enabled with a new feature - NVIDIA Reflex Latency Analyzer. This revolutionary addition enables gamers to measure their system responsiveness, allowing them to fully understand and tweak their PC’s performance before starting a match.

To access this capability, simply plug your mouse into the designated Reflex Latency Analyzer USB port on a 360Hz G-SYNC display. The display's Reflex USB port is a simple passthrough to the PC that watches for mouse clicks without adding any latency.

Reflex Latency Analyzer works by detecting the clicks coming from your mouse and measuring the time it takes for a resulting display pixel change (e.g. gunfire) to happen on the screen, providing you with a full System Latency measurement.
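The principle described above, timing the gap between a click and the first resulting pixel change, can be sketched in Python. This is only an illustration of the idea, not how the display hardware is implemented: the function name, the luminance samples, and the threshold are all assumptions made for the example.

```python
from typing import Optional, Sequence, Tuple


def click_to_pixel_latency_ms(
    click_time_ms: float,
    samples: Sequence[Tuple[float, float]],
    threshold: float,
) -> Optional[float]:
    """Return the delay from the click to the first luminance change of at least
    `threshold`, or None if no change is seen.

    `samples` are (timestamp_ms, luminance) pairs in time order for the watched
    screen region. At least one sample at or before the click is needed to
    establish the baseline.
    """
    baseline = None
    for timestamp_ms, luminance in samples:
        if timestamp_ms <= click_time_ms:
            baseline = luminance  # latest luminance seen before the click
            continue
        if baseline is not None and abs(luminance - baseline) >= threshold:
            return timestamp_ms - click_time_ms
    return None


# Made-up luminance trace: the muzzle flash appears at t = 20 ms.
trace = [(0.0, 0.10), (5.0, 0.10), (10.0, 0.11), (15.0, 0.12), (20.0, 0.85), (25.0, 0.90)]
print(click_to_pixel_latency_ms(4.0, trace, threshold=0.5))  # 16.0
```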

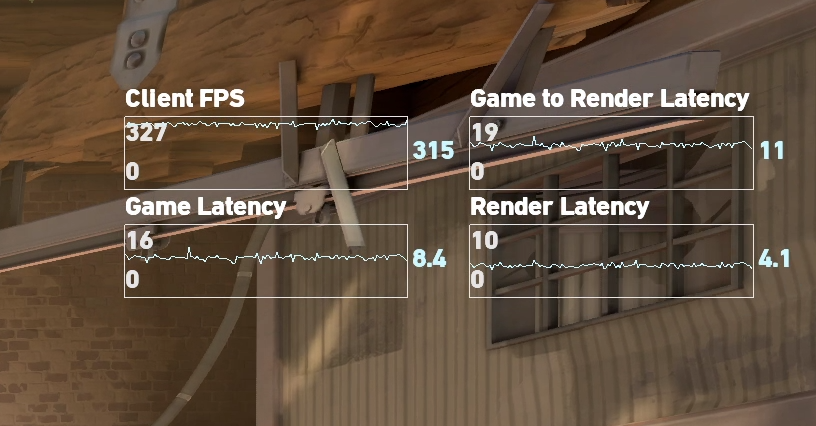

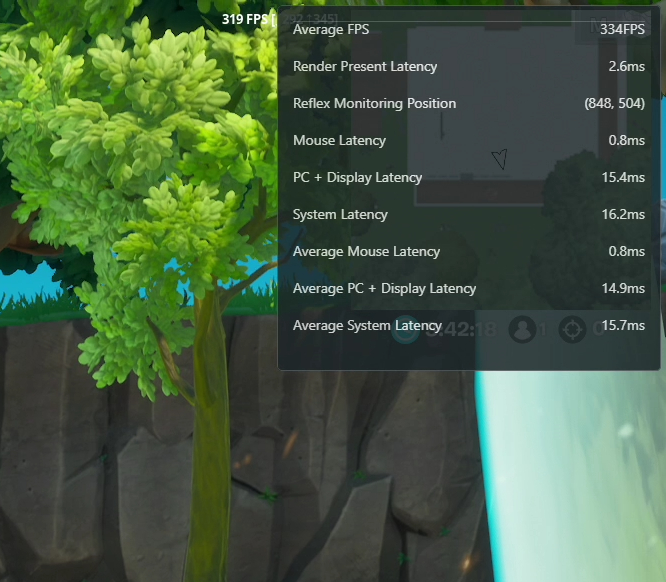

GeForce Experience’s new Performance Overlay reports the latency metrics in real-time. Once the feature is released in September, you can view the latency metrics by navigating to the “Performance Overlay” options and enabling the “Latency Metrics” setting.

NVIDIA Reflex Latency Analyzer breaks the system latency measurement up into Mouse Latency, PC + Display Latency, and System Latency.

You can use any mouse with Reflex Latency Analyzer to get PC + Display Latency (with the exception of Bluetooth mice). However, with a compatible mouse, you will also be able to measure peripheral latency and get full end-to-end system latency.

Additionally, we will be releasing an open database of average mouse latencies that can be referenced if GeForce Experience recognizes your mouse. Later on, the community will be able to add mice to the database. We will share more on this in the future.

At the time of writing, there are four mouse partners that have announced support for NVIDIA Reflex Latency Analyzer: ASUS, Logitech, Razer, and SteelSeries. Keep an eye on their websites and social media pages for announcements about NVIDIA Reflex Latency Analyzer compatibility. Also, starting this Fall, look out for 360Hz G-SYNC esports displays from ACER, Alienware, ASUS and MSI with built-in NVIDIA Reflex Latency Analyzer technology.

NVIDIA Reflex Software Metrics

If you are eager to start measuring latency, you can do so before you get your hands on a new 360Hz display. Any game that integrates the NVIDIA Reflex SDK also has the ability to add both Game Latency and Render Latency metrics to their in game stats. This measurement is not the full latency you feel, but can get you started on your path to latency optimization.

Additionally, GeForce Experience now features a performance overlay that lets you track render present latency in any game. Render present latency tracks the present call through the render queue and GPU rendering. Since it’s the final call of a frame, the magnitude of render present latency will be slightly smaller than render latency measured with the NVIDIA Reflex SDK, but should still give you a good idea of what your rendering latency is. We will be adding render latency to GeForce Experience in a future update.

All you need to do is update to the latest versions of the GeForce Game Ready Driver and GeForce Experience later this month when the feature is released, select the “Performance” menu, choose the “Latency Metrics” set, and enable the “Performance overlay”.

Training Your Aim with Lower Latency

In addition to latency measurement tools, we’ve partnered with The Meta, the developers of KovaaK 2.0, to introduce a new NVIDIA Experiments mode in a future client update, which will help gamers improve their performance and hone their skills.

You can enter the NVIDIA Experiments mode from either the sandbox or the trainer. Once you are in the NVIDIA Experiments mode, choose an experiment that interests you. We have also integrated the NVIDIA Reflex SDK into KovaaK 2.0, along with a few other technologies that can help gamers feel the difference between high and low system latency.

Taking part in experiments not only helps improve your aim, but also contributes to important esports research. Our partnership with The Meta on KovaaK 2.0 allows us to test and bust myths in the competitive gaming space. For example, one of our first experiments looks to add some science behind the preference in target color choice based on debates competitive gamers have around target outline colors in Valorant.

Other experiments will test things like different ranges of latency while giving you challenging tasks to complete. Check out The Meta’s KovaaK 2.0 on Steam today, and stay tuned for the release of the NVIDIA Experiments mode.

Next Level: System Latency - Expert Mode

Alright, let’s pop the hood and take a look at how this all works at the next level of detail. This section will cover how your mouse clicks actually get to the pixels on your screen, the concept of the game and rendering pipeline, the impact CPU and GPU-boundedness has on latency, overlap within the rendering pipeline, and finally some tools to help visualize what is happening on your system.

Breaking Down How Your Actions Get To The Display

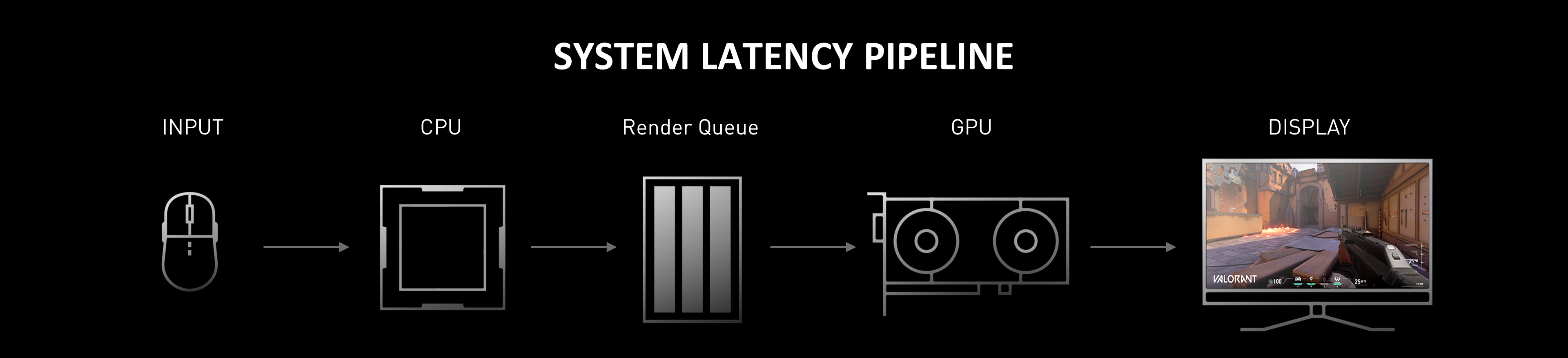

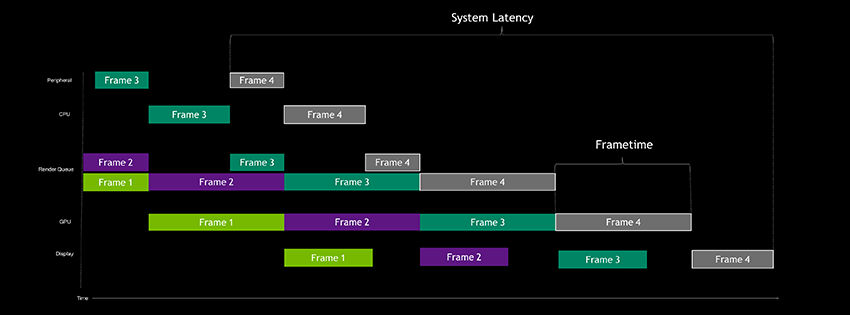

So how do your clicks really get to the display? The chart below breaks down the stages of the pipeline. Note that while there is overlap between these stages, they do need to start and finish in left-to-right order.

Okay, let’s break down each box on the second row in the above diagram. Note that the sizes of the boxes are not to scale. Additionally, we are going to focus on the mouse for simplicity, but everything below applies to any USB peripheral connected to the PC.

- Mouse HW - This is defined as the time from the first electrical contact to when the mouse is ready to send the event down the wire. In the mouse, there are a few routines (such as debounce) that add latency to your mouse button press. Note that debounce routines are important and prevent the mouse from clicking when you don’t want it to. These unwanted clicks are often referred to as double clicks - when two clicks are sent instead of one because the debounce window was too short. So latency isn’t the only critical attribute of your mouse’s performance.

- Mouse USB HW - Once debounce is finished, the mouse has to wait on the next poll to send the packets down the wire. This time is reflected in USB HW.

- Mouse USB SW - Mouse USB SW is the time the OS and mouse driver take to handle the USB packet.

- Sampling - Clicks come into the OS based on the mouse’s polling rate, at which point they may have to wait for the next opportunity to be sampled by the game. That waiting time is called sampling latency. This latency can grow or shrink based on your CPU framerate.

- Simulation - Games constantly have to update the state of the world. This update is often called Simulation. Simulation includes things like updating animations, the game state, and changes due to player input. Simulation is where your mouse inputs are applied to the game state.

- Render Submission - As the simulation figures out where to place things in the next frame, it will start to send rendering work to the graphics API runtime. The runtime in-turn hands off rendering commands to the graphics driver.

- Graphics Driver - The graphics driver is responsible for communicating with the GPU and sending it groupings of commands. Depending on the graphics API, the driver may do this grouping for the developer, or the developer may be responsible for grouping rendering work.

- Render Queue - Once the driver submits the work for the GPU to perform, it enters the render queue. The render queue is designed to keep the GPU constantly fed by always having work buffered for the GPU to do. This helps to maximize FPS (throughput), but can introduce latency.

- Render - The time it takes for the GPU to render all the work associated with a single frame.

- Composition - Depending on your display mode (Fullscreen, Borderless, Windowed), the Desktop Window Manager (DWM) in the OS has to submit some additional rendering work to composite the rest of the desktop for a particular frame. This can add latency. It’s recommended to always be in exclusive fullscreen mode to minimize compositing latency!

- Scanout - Once composition completes, the final frame buffer is ready to be displayed. The GPU then signals that the frame buffer is ready for the display and changes which frame buffer is being read from for scanout. If VSYNC is on, this “flip” in framebuffers can stall due to having to wait for the VSYNC of the display. Once ready, the GPU feeds the next frame to the display, line by line, based on the refresh rate (Hz) of the display. Given the fact that scanout is a function of the refresh rate, we include it in “Display Latency”.

- Display Processing - Display processing is the time the display takes to process the incoming frame (scanlines) and initiate the pixel response.

- Pixel Response - This is the time it takes a pixel to change from one color to the next. Since pixels are actual liquid crystals, it takes time for them to change. Pixel response times can vary based on the intensity of the required change, and will also depend on the panel technology.
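Several of the stages above are waits for the next periodic opportunity: the next USB poll, the next time the game samples input, or the next refresh of the display. The Python sketch below estimates those waits. It assumes events arrive at a uniformly random point within each interval and that scanout of a full frame takes roughly one refresh interval; the function names and the example rates are illustrative choices, not values from this article's measurements.

```python
def worst_case_wait_ms(rate_hz: float) -> float:
    """Longest wait for the next periodic opportunity: one full interval."""
    return 1000.0 / rate_hz


def average_wait_ms(rate_hz: float) -> float:
    """Average wait, assuming the event lands at a uniformly random time
    within the interval (half an interval on average)."""
    return 0.5 * worst_case_wait_ms(rate_hz)


def scanout_ms(refresh_hz: float) -> float:
    """Approximate time to scan out one full frame, line by line."""
    return 1000.0 / refresh_hz


# Mouse USB HW: waiting for the next poll at a 1000 Hz polling rate.
print(average_wait_ms(1000), worst_case_wait_ms(1000))  # 0.5 1.0

# Sampling: waiting for the game to read input at a 144 FPS CPU framerate.
print(round(average_wait_ms(144), 2))                   # 3.47

# Scanout: 360 Hz versus 60 Hz display.
print(round(scanout_ms(360), 2), round(scanout_ms(60), 2))  # 2.78 16.67
```

This is why sampling latency shrinks as the CPU framerate rises, and why scanout is counted as part of display latency: both scale directly with a rate.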

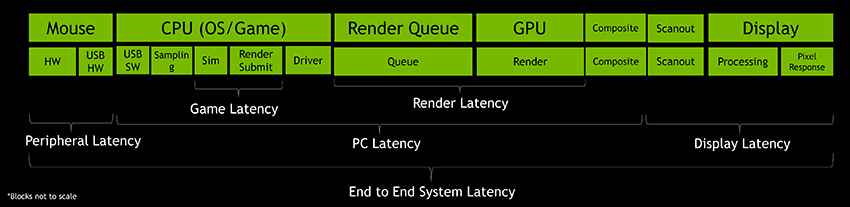

GPU-Bound Latency Pipeline

Now that we know how a click gets to the screen, let’s dig into performance. When profiling games, we often attempt to characterize performance as GPU or CPU-bound. This is extremely helpful for understanding system performance, but in the real world, games often switch back and forth.

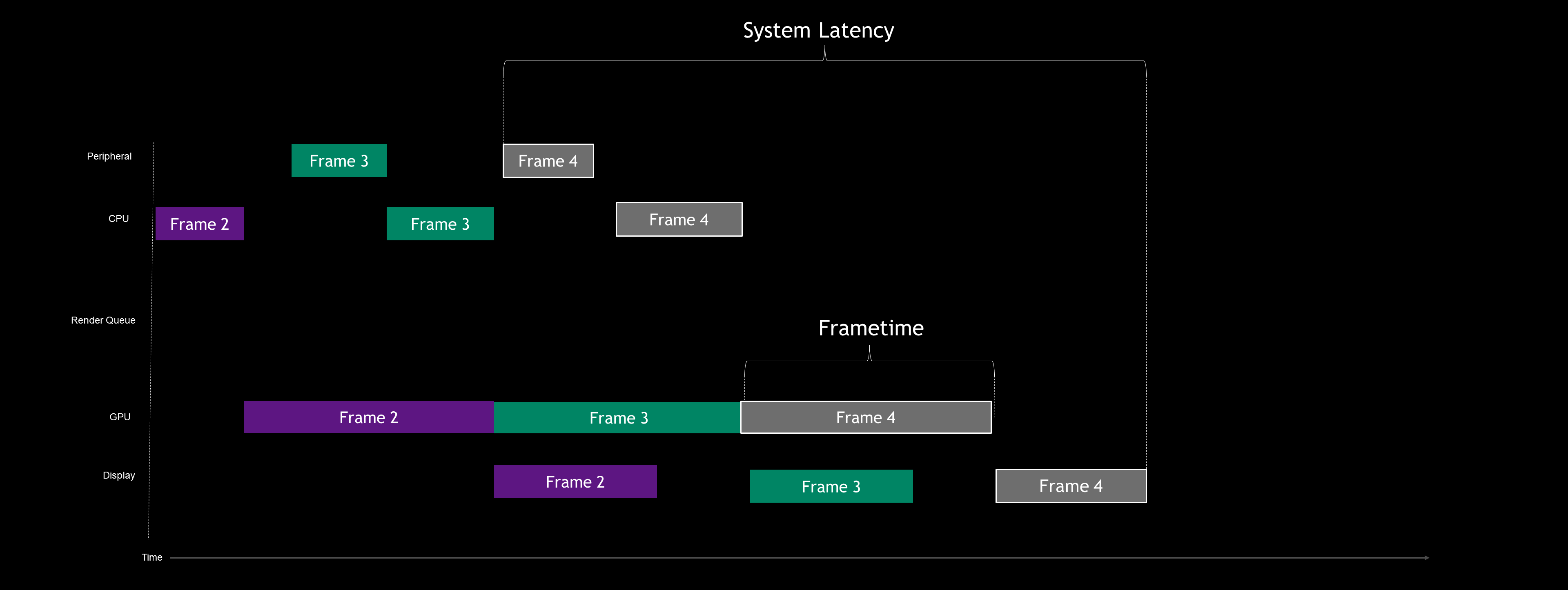

Let’s start with the GPU-bound case when VSYNC is off.

In this example, we are simplifying the pipeline down to 5 major stages: Peripheral, CPU, Render Queue, GPU, and Display.

Let’s inspect Frame 4 and look into what is going on at each stage:

- Peripheral - Mouse input or keyboard input can start whenever; it’s up to the user. In this example, the mouse was clicked before the CPU was ready to accept the input, so the input event just waits. It’s like arriving at the train station and waiting for the next train.

- CPU - The start of the CPU (Simulation) typically begins after what is called a Present block finishes. In the GPU-bound case, the CPU executes its work faster, meaning that it can run ahead of the GPU. However, in most graphics APIs (DX11, DX12, Vulkan, etc.) the number of frames that the CPU render submission thread can run ahead is limited. In the case above, the CPU is allowed to run two frames ahead. The CPU section finishes when the driver is done submitting work to the GPU. In reality, there is overlap with the render queue, but we will get into that later.

- Render Queue - Think of this like any other line or queue. The first one to get in the line is the first one out. If the GPU is working on the previous frame when it comes time for the CPU to submit more work, the CPU places that rendering work into the render queue. This queue can be helpful for making sure the GPU is constantly fed and can help smooth out frametimes, however it can add a significant amount of latency.

- GPU - This is the actual GPU rendering of the frame. In the GPU-bound case, work is back to back as the GPU is the component that is the bottleneck.

- Display - This is the case where VSYNC is off. When the GPU finishes rendering, it immediately scans out the new buffer regardless of where the display is in its scan process. This creates tearing, but is often preferred by gamers because it provides the lowest latency. Stay tuned for an article on VSYNC and G-SYNC in the future.

Alright, now that we understand what’s going on here, we can see that we are GPU bottlenecked, causing the render queue to pile up and the CPU to run ahead. In the image above, we can also see the frametime, which is what determines FPS: the shorter the frametime, the higher the framerate. A faster GPU would produce a higher framerate in this case.

Additionally, we can see the system latency, measured from when the mouse was first clicked to when the display finished. Latency is typically higher in GPU-bound cases for two reasons: frames wait in the render queue, and the game runs ahead of the Present block, generating new frames whose submission is then delayed.
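The effect of the render queue on latency can be illustrated with a toy model. This is a simplified sketch, not how any driver actually schedules work: it assumes fixed CPU and GPU times per frame, no overlap between the CPU and GPU work of the same frame, and that the CPU may start frame `i` only once the GPU has finished frame `i - 1 - max_frames_ahead`. The function name, parameters, and timings are all hypothetical, and latency here covers only CPU start to GPU finish (peripheral and display stages are left out).

```python
def simulate_render_queue(cpu_ms, gpu_ms, max_frames_ahead, n_frames=12):
    """Toy GPU-bound pipeline. Returns (latencies, gpu_end_times) in ms.

    Latency per frame = GPU finish time - CPU start time, so it includes
    any time the frame spends waiting in the render queue.
    """
    cpu_end, gpu_end, latencies = [], [], []
    for i in range(n_frames):
        # The CPU is serial: it cannot start frame i before finishing i-1.
        cpu_start = cpu_end[i - 1] if i > 0 else 0.0
        # Run-ahead limit: block until an older frame has left the GPU.
        blocker = i - 1 - max_frames_ahead
        if blocker >= 0:
            cpu_start = max(cpu_start, gpu_end[blocker])
        cpu_done = cpu_start + cpu_ms
        # The GPU is serial too: the frame queues until the GPU is free.
        gpu_free = gpu_end[i - 1] if i > 0 else 0.0
        gpu_done = max(cpu_done, gpu_free) + gpu_ms
        cpu_end.append(cpu_done)
        gpu_end.append(gpu_done)
        latencies.append(gpu_done - cpu_start)
    return latencies, gpu_end


if __name__ == "__main__":
    for ahead in (1, 2):
        lat, ends = simulate_render_queue(cpu_ms=4, gpu_ms=10, max_frames_ahead=ahead)
        frametime = ends[-1] - ends[-2]
        print(f"frames ahead={ahead}: frametime={frametime} ms, latency={lat[-1]} ms")
```

With these made-up timings the frametime stays at 10 ms in both runs, but steady-state latency grows from 20 ms at one frame ahead to 30 ms at two: the extra queued frame adds latency without adding throughput.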

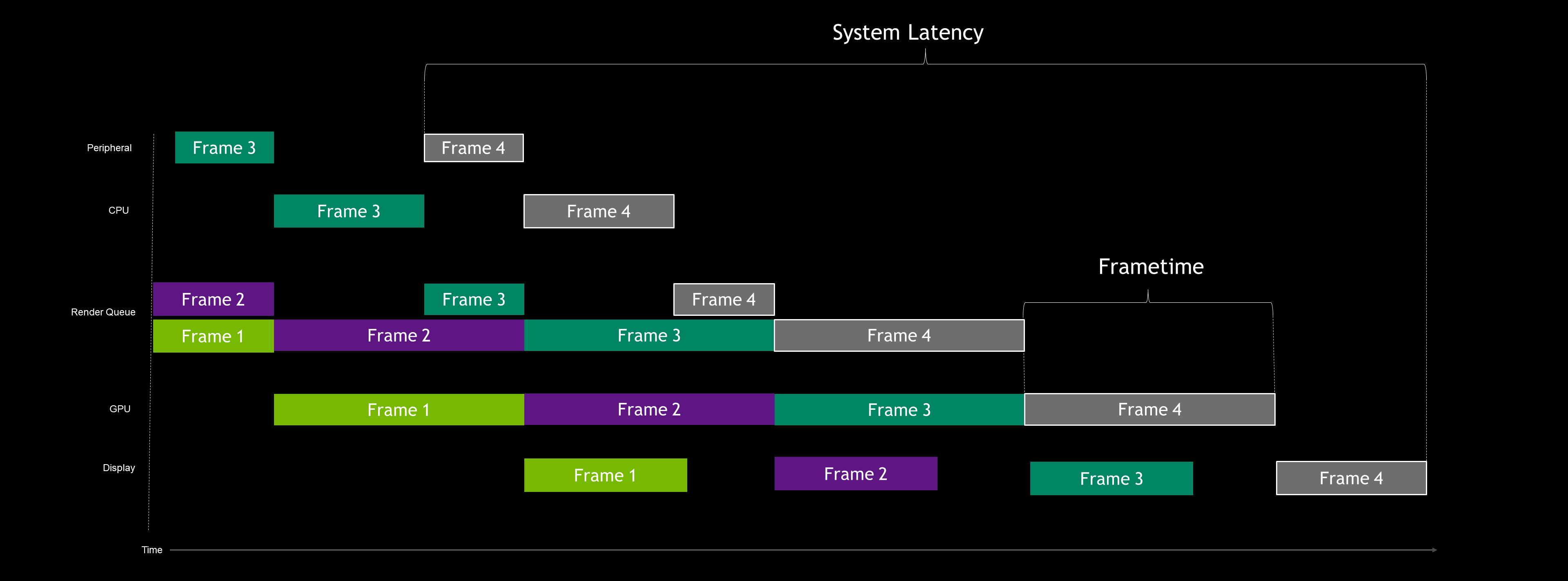

NVIDIA Reflex SDK Latency Pipeline

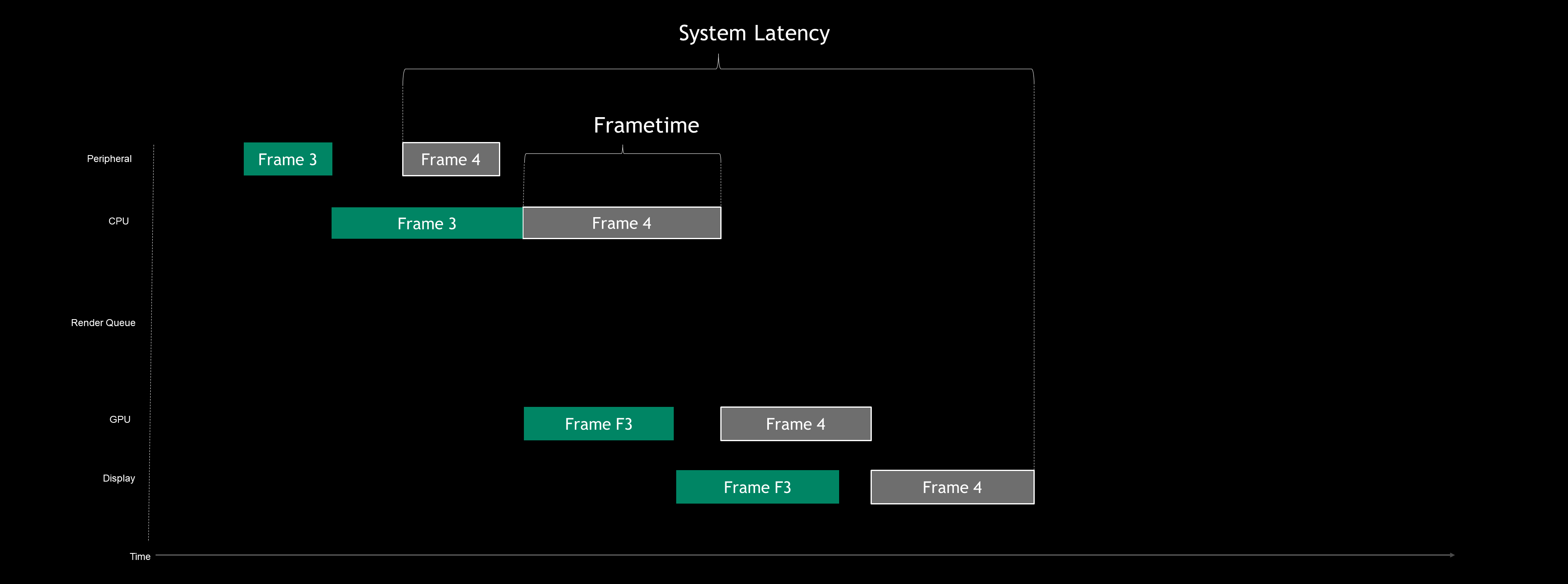

Now, let’s take a look at what the NVIDIA Reflex SDK does to the GPU-bound pipeline:

As you can see, the render queue has pretty much disappeared. The Reflex SDK doesn’t disable it, though; it just empties it. But how does this work?

Essentially, the game is able to better pace the CPU such that it can’t run ahead. In addition, it’s allowed to submit work to the GPU just in time for the GPU to start working without any idle gaps in the GPU work pipeline. Also, by starting the CPU work later, it provides opportunities for inputs to be sampled at the last possible millisecond, further reducing latency.

Additionally, when the render queue is reduced with the method used by the SDK, game latency also starts to shrink. These savings come from the reduction in back pressure that the render queue causes in GPU-bound scenarios.

For those of you who have optimized for latency before, this is like using a good in-game framerate limiter to reduce latency. Good in-game framerate limiters will stall the game in the right spots, allowing for lower latency and a reduction of back pressure on the CPU.

However, with NVIDIA Reflex, instead of being locked to a particular framerate, your framerate can run faster than your limit, further reducing your latency. You can think of it as a “dynamic” framerate limiter that keeps you in the latency sweet spot at all times.
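The just-in-time idea can be sketched with a toy model. To be clear, this is not the Reflex SDK's algorithm or API: it is an illustration that assumes fixed, perfectly predictable CPU and GPU times, and it simply delays each CPU start so that submission completes at the moment the GPU becomes free. The function name and timings are hypothetical, and latency is counted only from CPU start to GPU finish.

```python
def simulate_just_in_time(cpu_ms, gpu_ms, n_frames=12):
    """Toy 'dynamic limiter' pacing. Returns (latencies, gpu_end_times) in ms.

    Assumption: the pacer knows exactly when the GPU will finish the
    previous frame, which a real system can only estimate.
    """
    cpu_end, gpu_end, latencies = [], [], []
    for i in range(n_frames):
        prev_cpu_end = cpu_end[i - 1] if i > 0 else 0.0
        gpu_free = gpu_end[i - 1] if i > 0 else 0.0
        # Start as late as possible while still finishing by gpu_free,
        # so the frame never waits in a queue and the GPU never idles.
        cpu_start = max(prev_cpu_end, gpu_free - cpu_ms)
        cpu_done = cpu_start + cpu_ms
        gpu_done = max(cpu_done, gpu_free) + gpu_ms
        cpu_end.append(cpu_done)
        gpu_end.append(gpu_done)
        latencies.append(gpu_done - cpu_start)
    return latencies, gpu_end


if __name__ == "__main__":
    lat, ends = simulate_just_in_time(cpu_ms=4, gpu_ms=10)
    print(f"frametime={ends[-1] - ends[-2]} ms, latency={lat[-1]} ms")
```

With a 4 ms CPU stage and a 10 ms GPU stage, the frametime remains 10 ms (the GPU stays saturated) while latency settles at 14 ms, the sum of the two stages with no queue wait in between.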

CPU-Bound Latency Pipeline

When using Reflex Low Latency mode in a GPU-bound case, the pipeline behaves as though it’s CPU-bound even while the GPU remains fully saturated and utilized. Let’s take a look at what an actual CPU-bound pipeline looks like.

As you can see in this chart, the framerate is limited by the CPU. Since the CPU can’t run ahead of the GPU, there is no render queue in this case either. In general, being CPU-bound is a lower latency state than being GPU-bound.

In this case, a faster GPU won’t yield any additional FPS, but it will reduce your latency. When VSYNC is off or when G-SYNC is enabled, a faster GPU means that the rendered image can be sent to the display faster.
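As a rough worked example of that claim, the sketch below uses a deliberately simple steady-state model: the frametime equals the CPU time, and latency is the CPU time plus the GPU time with no queue wait and no overlap between the two. Both the model and the numbers are assumptions for illustration, and `cpu_bound_steady_state` is a hypothetical helper.

```python
def cpu_bound_steady_state(cpu_ms, gpu_ms):
    """Return (fps, latency_ms) for a toy CPU-bound pipeline.

    Assumptions: fixed per-frame times, empty render queue, and no
    overlap between a frame's CPU work and its GPU work.
    """
    if gpu_ms > cpu_ms:
        raise ValueError("this sketch only covers the CPU-bound case (gpu_ms <= cpu_ms)")
    fps = 1000.0 / cpu_ms          # the CPU sets the pace
    latency_ms = cpu_ms + gpu_ms   # the GPU still adds its render time
    return fps, latency_ms


if __name__ == "__main__":
    for gpu_ms in (6, 3):
        fps, latency = cpu_bound_steady_state(cpu_ms=10, gpu_ms=gpu_ms)
        print(f"GPU {gpu_ms} ms: {fps:.0f} FPS, {latency} ms latency")
```

Halving the GPU time from 6 ms to 3 ms leaves the framerate at 100 FPS in this model but trims latency from 16 ms to 13 ms.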

If you have ever wondered why lowering your settings makes the game feel more responsive, this is why. Reducing settings can often create a CPU-bound scenario (eliminating the queue) and simultaneously reduces the GPU render time, further reducing latency.

With the Reflex Low Latency mode, gamers don’t have to default to turning their settings all the way down. Since we can effectively reduce the render queue, extra rendering work only adds to the GPU render time.

Additionally, even if you are CPU-bound, the Reflex Low Latency mode also has a Boost setting that disables power saving features in favor of slightly reducing latency. In the CPU-bound cases where GPU utilization is low, GPU clocks are kept high to speed up processing such that a frame can be delivered to the display as soon as possible. Generally, this Boost setting will provide a very modest benefit, but can help squeeze every last millisecond of latency out of the pipeline.

Diving Into PC Latency and Overlap

Ready to go one level deeper? Let’s take a look at a single frame, but this time looking at the pipeline with full overlap.

As you can see, the majority of the overlap happens in the core of PC Latency between simulation and the GPU render being complete. But why does this exist?

Frames are rendered in small pieces of work called drawcalls. These calls are eventually grouped together into packets of work. Those packets of work are then sent by the graphics driver to the GPU to be rendered. This allows each of the stages to start working before the prior stage has finished - splitting up the frame into bite-sized pieces.

As work makes its way through the pipeline, it eventually gets written to the frame buffer. This continues until the frame is fully rendered. Once it’s done rendering, the back buffer is swapped with another available buffer in the swap chain and sent to scan out.

This is important to understand when looking at rendering latency and game latency. Often game latency and render latency overlap, which means simply adding them together won’t produce a correct latency sum.
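A small example makes the overlap point concrete. The timestamps below are made up for illustration, and the helper names are hypothetical: each stage is a `(start_ms, end_ms)` interval, and the elapsed time across overlapping stages is the span from the earliest start to the latest end, not the sum of the individual durations.

```python
def naive_sum_ms(stages):
    """Add up each stage's duration, double-counting any overlap."""
    return sum(end - start for start, end in stages.values())


def elapsed_span_ms(stages):
    """Elapsed time from the earliest stage start to the latest stage end."""
    starts = [start for start, _ in stages.values()]
    ends = [end for _, end in stages.values()]
    return max(ends) - min(starts)


# Hypothetical timestamps: rendering begins at 3 ms, while the game
# is still submitting work until 6 ms.
stages = {
    "game": (0.0, 6.0),
    "render": (3.0, 11.0),
}

if __name__ == "__main__":
    print("naive sum:", naive_sum_ms(stages), "ms")
    print("elapsed span:", elapsed_span_ms(stages), "ms")
```

Here the naive sum is 14 ms while the actual elapsed time is 11 ms; the 3 ms difference is exactly the window in which both stages were active.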

Wrapping Up

System Latency is both the quantitative measurement of how your game feels and the key factor impacting players’ aiming precision in first person shooters. NVIDIA Reflex enables developers and players to optimize for system latency and provides the ability to easily measure system latency for the first time.

To summarize, NVIDIA Reflex offers a full suite of latency technology:

- Low Latency Tech:

  - NVIDIA Reflex SDK - Developer SDK used to enable NVIDIA Reflex Low Latency for lower latency in GPU-intensive scenarios

  - Latency Optimized Driver Control Panel Settings - Enhanced “Prefer maximum performance” mode and “Ultra Low Latency” mode

  - GeForce Experience Performance Tuning - Automatic tuner for 1-click GPU overclocking

- Latency Measurement Tools:

  - NVIDIA Reflex SDK metrics - Game and render latency markers enable developers to show latency metrics in game

  - NVIDIA Reflex Latency Analyzer - New feature of 360Hz G-SYNC displays that enables full end-to-end System Latency measurement for the first time

  - GeForce Experience Performance Monitoring - Side bar and in-game overlay that displays real-time performance metrics, including latency

We are excited to bring you NVIDIA Reflex, and to help you obtain a more responsive gaming experience. At NVIDIA, we are laser-focused on reducing latency and will continue to refine NVIDIA Reflex and expand our partner ecosystem.

We would love to hear your feedback! Head on over to the Reflex community forum to chat about latency, or ask questions about the NVIDIA Reflex suite of technologies.

Driver support for NVIDIA Reflex low latency mode will be available with the September 17th Game Ready Driver, and partners will be adding game support to their titles throughout this year. 360Hz NVIDIA G-SYNC gaming monitors will be available starting this Fall from ACER, Alienware, ASUS and MSI.