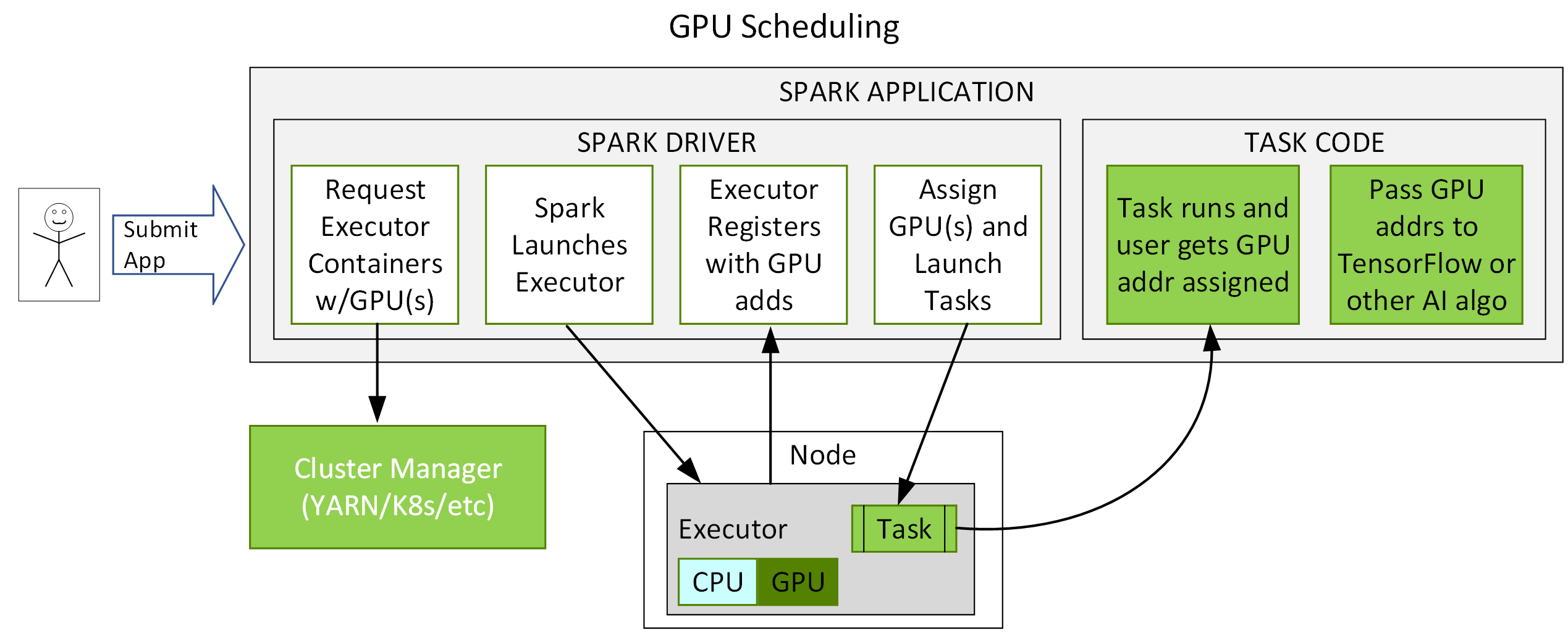

Spark 3.x integrates with the YARN, Kubernetes, and Standalone cluster managers to request GPUs, and adds plugin points that can be extended to run operations on GPUs. On Kubernetes, Spark 3.x isolates GPUs at the executor pod level. Together, these changes make GPUs easier for Spark application developers to request and use, allow closer integration with DL and AI frameworks such as Horovod and TensorFlow on Spark, and improve GPU utilization.

An example flow for GPU scheduling is shown in the diagram below. The user submits an application with a GPU resource configuration and a discovery script. Spark starts the driver, which passes the configuration to the cluster manager to request a container with the specified resources and GPUs. The cluster manager returns the container, and Spark launches it. When the executor starts, it runs the discovery script to find the GPUs available to it. Spark sends that information back to the driver, which then uses it to schedule tasks onto GPUs.

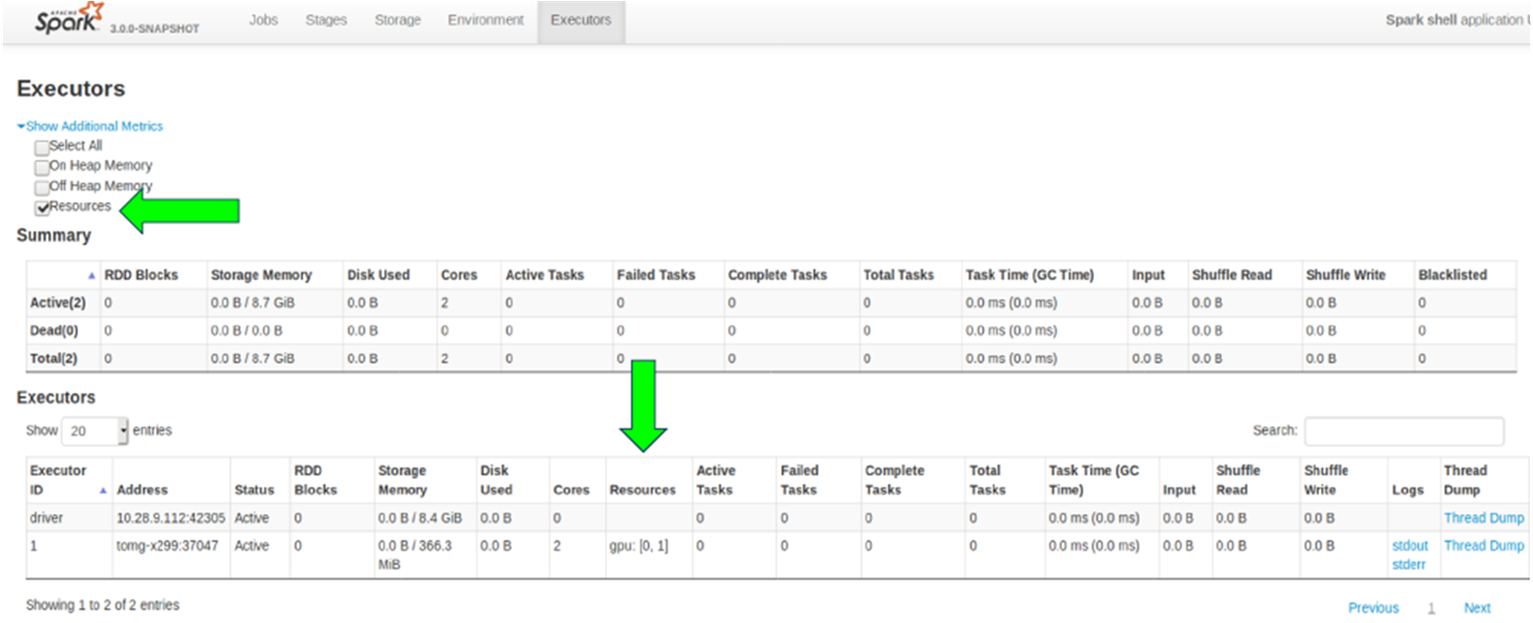
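To make the discovery step in this flow concrete, here is a minimal sketch of a discovery script written in Python. Spark expects such a script to print a JSON object with a resource `name` and an array of `addresses` to standard output. The helper names `format_resource` and `discover_gpu_indices` are illustrative, and the sketch assumes NVIDIA GPUs with `nvidia-smi` on the path; it is not the script shipped with Spark.

```python
#!/usr/bin/env python3
import json
import shutil
import subprocess


def format_resource(name, addresses):
    """Return the JSON string Spark reads from a discovery script's stdout."""
    return json.dumps({"name": name, "addresses": [str(a) for a in addresses]})


def discover_gpu_indices():
    """List GPU indices via nvidia-smi; return [] if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    # One GPU index per output line, e.g. "0" then "1" on a two-GPU node.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    print(format_resource("gpu", discover_gpu_indices()))
```

A script like this would typically be made executable and referenced through `spark.executor.resource.gpu.discoveryScript`, with `spark.executor.resource.gpu.amount` and `spark.task.resource.gpu.amount` setting how many GPUs each executor and each task request.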

The Spark Web UI now includes a checkbox that shows which resources have been allocated. In this example, two GPUs have been allocated.

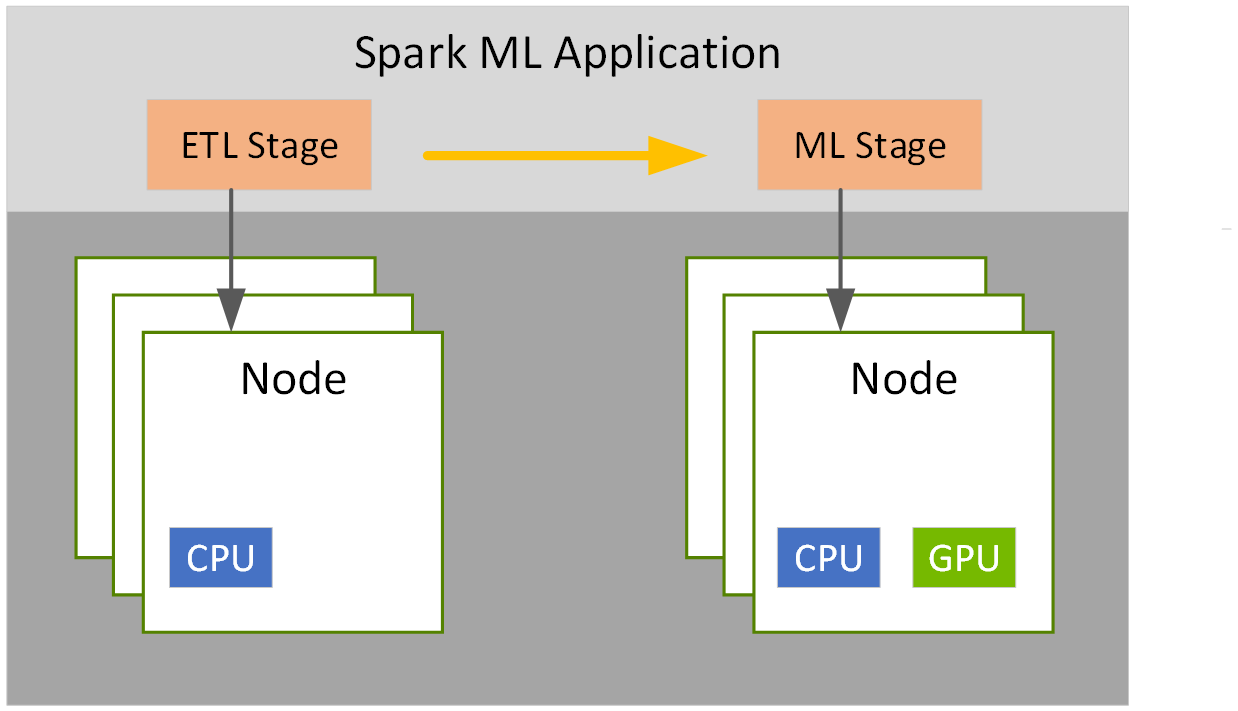

Spark 3.x stage-level resource scheduling lets you choose one container size for one stage and a different size for another, for example one size for ETL and another for ML.
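As a rough sketch of what choosing a different size per stage can look like in PySpark, the example below builds a resource profile from a plain dictionary using the `pyspark.resource` classes. The `ETL_STAGE` and `ML_STAGE` values and the `build_profile` helper are hypothetical, chosen only for illustration. It assumes PySpark 3.1 or later and the RDD API.

```python
# Hypothetical per-stage sizes; the numbers are illustrative only.
ETL_STAGE = {"cores": 8, "memory": "16g", "gpus": 0}
ML_STAGE = {"cores": 4, "memory": "8g", "gpus": 1}


def build_profile(spec, discovery_script=None):
    """Turn a plain dict into a PySpark ResourceProfile (needs pyspark >= 3.1)."""
    # Imported lazily so the specs above can be inspected without pyspark installed.
    from pyspark.resource import (
        ExecutorResourceRequests,
        ResourceProfileBuilder,
        TaskResourceRequests,
    )

    executor_reqs = ExecutorResourceRequests().cores(spec["cores"]).memory(spec["memory"])
    task_reqs = TaskResourceRequests().cpus(1)
    if spec["gpus"] > 0:
        # GPU stages request GPUs per executor and one GPU per task.
        executor_reqs = executor_reqs.resource("gpu", spec["gpus"], discovery_script)
        task_reqs = task_reqs.resource("gpu", 1)
    # In PySpark, `build` is a property rather than a method.
    return ResourceProfileBuilder().require(executor_reqs).require(task_reqs).build
```

With an active Spark application, a profile built this way could be attached to the ML stage with something like `ml_rdd = etl_rdd.withResources(build_profile(ML_STAGE, script_path))`, where `script_path` points at a GPU discovery script. Depending on the Spark version and cluster manager, this may also require dynamic allocation to be enabled.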